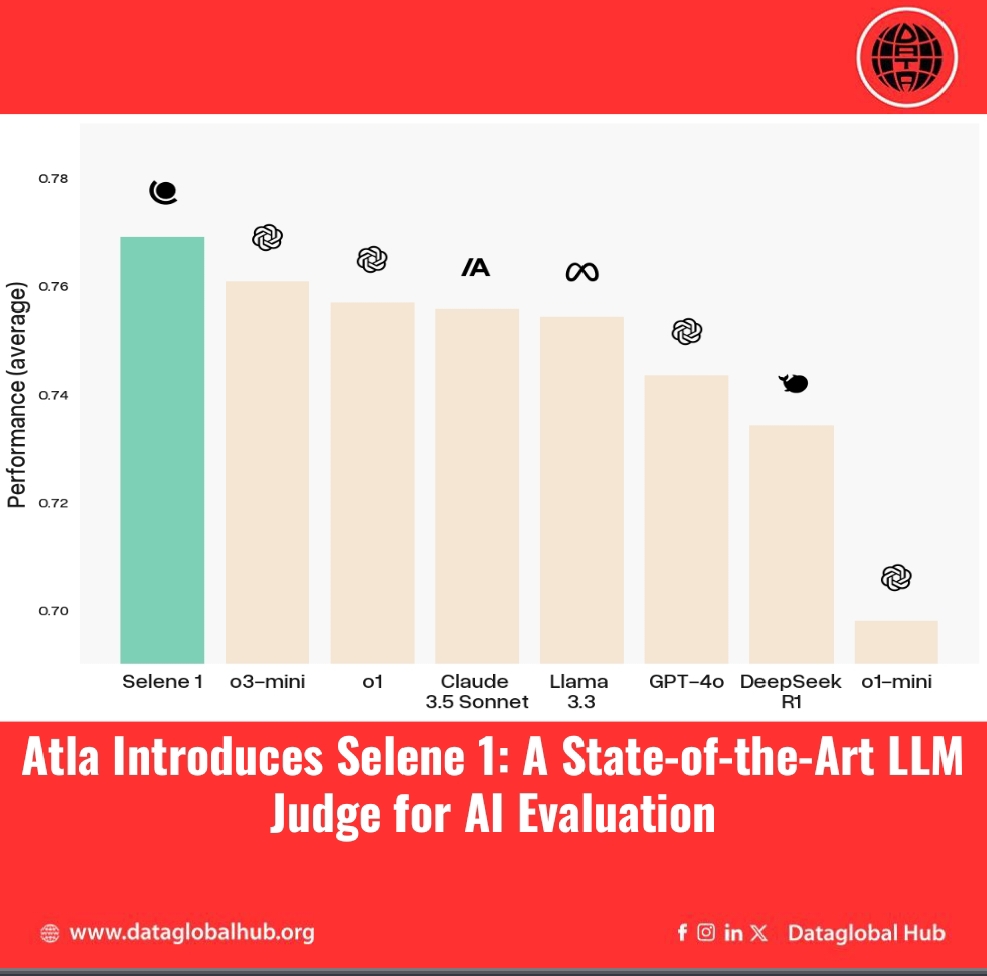

Atla Introduces Selene 1: A State-of-the-Art LLM Judge for AI Evaluation

Atla has introduced Selene 1, a cutting-edge LLM Judge designed specifically to evaluate generative AI responses. Selene 1 achieves state-of-the-art performance across 11 commonly used benchmarks for AI evaluation, outperforming models including OpenAI's o-series, Anthropic's Claude 3.5 Sonnet, and DeepSeek's R1 on average. This makes it one of the most advanced tools available for assessing AI-generated content.

A General-Purpose Evaluator with Strong Performance

Selene 1 excels in a variety of evaluation tasks.

It backs up its evaluations with chain-of-thought reasoning, providing actionable critiques that enhance transparency and usability. Selene 1 can be applied to tasks such as detecting hallucinations in retrieval-augmented generation (RAG) systems, assessing logical reasoning in AI agents, and verifying correctness in domain-specific applications. It supports both reference-based and reference-free evaluation, making it a flexible tool for different use cases.
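The difference between reference-based and reference-free evaluation can be illustrated with a minimal sketch of how a judge prompt might be assembled. This is not Atla's API or Selene 1's actual prompt format; the function name `build_judge_prompt`, its parameters, and the 1-5 score scale are all hypothetical, chosen only to show that the reference answer is an optional input.

```python
from typing import Optional


def build_judge_prompt(
    user_input: str,
    response: str,
    criteria: str,
    reference: Optional[str] = None,
) -> str:
    """Assemble a prompt for an LLM judge (hypothetical format, for illustration).

    With `reference` set, the evaluation is reference-based; without it,
    the judge must rely on the criteria alone (reference-free).
    """
    sections = [
        "You are evaluating an AI response.",
        f"Evaluation criteria: {criteria}",
        f"Input:\n{user_input}",
        f"Response to evaluate:\n{response}",
    ]
    if reference is not None:
        # Reference-based mode: give the judge a ground-truth answer to compare against.
        sections.append(f"Reference answer:\n{reference}")
    # Ask for a critique before the score, mirroring chain-of-thought style judging.
    sections.append(
        "Explain your reasoning step by step, then give a final line "
        "of the form 'Score: <1-5>'."
    )
    return "\n\n".join(sections)


if __name__ == "__main__":
    print(build_judge_prompt(
        user_input="What is the capital of France?",
        response="The capital of France is Lyon.",
        criteria="Factual accuracy",
        reference="Paris",
    ))
```

Dropping the `reference` argument yields the reference-free variant of the same prompt, which is the mode a hallucination check would use when no ground-truth answer exists.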

Fine-Grained Steering and Customization

Selene 1 is designed to be highly customizable, responding well to fine-grained instructions that allow users to adjust evaluation criteria according to specific needs. Atla has also introduced the Alignment Platform, a tool that simplifies the creation and refinement of custom evaluation metrics. Users can describe their task, and the platform assists in generating and testing tailored evaluation prompts, requiring little to no prompt engineering.
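One common way to test a custom evaluation prompt is to compare the judge's verdicts with human labels on a small set of examples. The sketch below shows that idea only; it is not how the Alignment Platform works internally, and `agreement_rate` and `toy_judge` are hypothetical names. The toy judge is a keyword stand-in for a real call to an LLM judge.

```python
from typing import Callable, Sequence, Tuple


def agreement_rate(
    judge: Callable[[str], int],
    labeled: Sequence[Tuple[str, int]],
) -> float:
    """Fraction of examples where the judge's verdict matches the human label."""
    if not labeled:
        raise ValueError("need at least one labeled example")
    matches = sum(1 for response, human in labeled if judge(response) == human)
    return matches / len(labeled)


def toy_judge(response: str) -> int:
    """Stand-in judge (assumption): passes a response only if it mentions a source."""
    return 1 if "source" in response.lower() else 0


if __name__ == "__main__":
    examples = [
        ("According to the source, X.", 1),
        ("I think X.", 0),
        ("Source says Y.", 1),
        ("Definitely Z.", 1),  # the human passed this one; the toy judge will not
    ]
    print(agreement_rate(toy_judge, examples))  # 0.75
```

A low agreement rate suggests the evaluation criteria need rewording before the metric is trusted at scale.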

Benchmark Performance Highlights

Selene 1 delivers state-of-the-art performance across key benchmarks.

Integration and Accessibility

Selene 1 is designed for seamless integration into existing AI evaluation workflows.

Atla’s release of Selene 1 offers a robust, customizable, and high-performance solution for developers and researchers focused on improving the reliability of AI-generated content.

About the Author

Amira Hassan

Amira Hassan is an AI news correspondent from Egypt.
