HunyuanCustom: A Multimodal Framework for Customized Video Generation

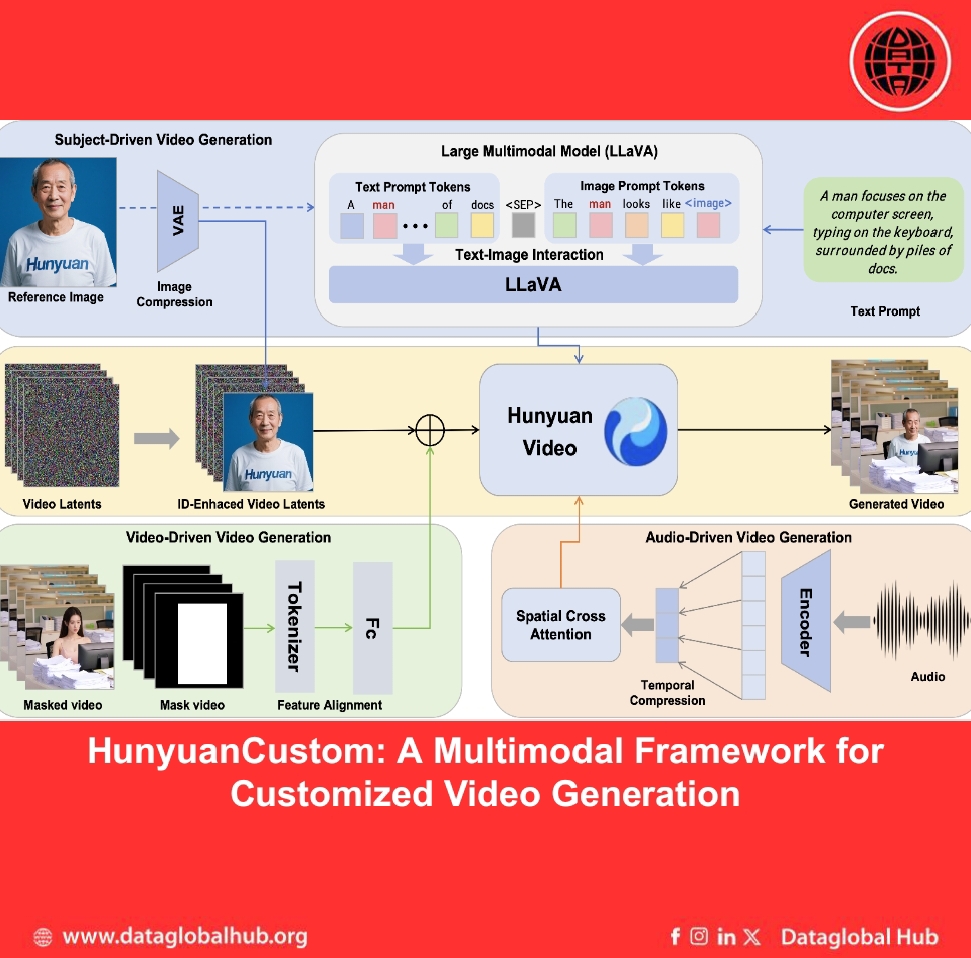

Creating personalized videos that preserve consistent subject identity across various contexts remains a significant challenge in artificial intelligence. Tencent’s HunyuanCustom, built on the HunyuanVideo platform, introduces a multimodal framework that enables users to generate subject-consistent videos using text, images, audio, and video inputs. Through careful integration of these modalities, the model enhances identity preservation, realism, and alignment with user-specified conditions. Whether it's a girl playing with plush toys or a man interacting with a penguin, HunyuanCustom enables coherent video generation guided by detailed user prompts.

Technical Framework

HunyuanCustom employs several architectural innovations to support flexible and controllable video generation:

Modality-Specific Injection Modules:

Applications

HunyuanCustom supports a wide range of applications across single-subject, multi-subject, and audio/video-driven tasks:

Performance Evaluation

In experiments across a range of scenarios, HunyuanCustom has shown competitive performance against both open- and closed-source solutions. Evaluation metrics confirm its strengths in:

Further benchmarking and peer-reviewed results are still pending, but initial findings suggest that HunyuanCustom is a strong candidate for controllable video generation.

About the Author

Eva Rossi

Eva Rossi is an AI news correspondent from Italy.
