Introducing Mercury: A Faster, More Efficient Approach to Language Models


Inception Labs has unveiled Mercury, a new family of diffusion-based large language models (dLLMs) designed to address the speed and cost limitations of traditional autoregressive models. Conventional LLMs generate text sequentially, one token at a time. Mercury instead uses a diffusion process that refines the output in parallel, enabling faster generation and better error correction.
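The article does not describe Mercury's exact sampling procedure, so the following is only a toy sketch of the general idea behind masked-diffusion decoding, not Mercury's implementation. `toy_model` is a hypothetical stand-in for a trained network: it simply returns the known target token for every position along with a random confidence score. What the sketch illustrates is the number of model calls. Autoregressive decoding needs one call per token, while the diffusion-style loop starts from a fully masked sequence and fills in several of the most confident positions per call, so a 12-token output takes 4 calls instead of 12. Real dLLMs can also revise tokens they have already placed (the error correction mentioned above), which this sketch omits.

```python
import math
import random

MASK = "<mask>"


def toy_model(tokens, target):
    """Hypothetical stand-in for a trained network.

    Proposes a (token, confidence) pair for every position in `tokens`.
    Here it just 'knows' the target; confidences are random.
    """
    return [(target[i], random.random()) for i in range(len(tokens))]


def autoregressive_decode(target):
    """Left-to-right generation: one model call yields one new token."""
    tokens, calls = [], 0
    while len(tokens) < len(target):
        proposals = toy_model(tokens + [MASK], target)
        calls += 1
        tokens.append(proposals[len(tokens)][0])  # keep only the next token
    return tokens, calls


def diffusion_decode(target, steps):
    """Masked-diffusion-style generation: each call unmasks several positions."""
    n = len(target)
    tokens, calls = [MASK] * n, 0
    per_step = math.ceil(n / steps)  # positions committed per model call
    while MASK in tokens:
        proposals = toy_model(tokens, target)
        calls += 1
        masked = [i for i, t in enumerate(tokens) if t == MASK]
        # Commit the most confident masked positions in parallel.
        masked.sort(key=lambda i: proposals[i][1], reverse=True)
        for i in masked[:per_step]:
            tokens[i] = proposals[i][0]
    return tokens, calls


target = "def add ( a , b ) : return a + b".split()  # 12 tokens
ar_tokens, ar_calls = autoregressive_decode(target)
dd_tokens, dd_calls = diffusion_decode(target, steps=4)
print(ar_calls, dd_calls)  # prints "12 4"
```

Fewer calls only translate into lower latency if each parallel pass over the whole sequence runs efficiently on the GPU, which is the trade-off diffusion-based models are betting on.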

Key Features

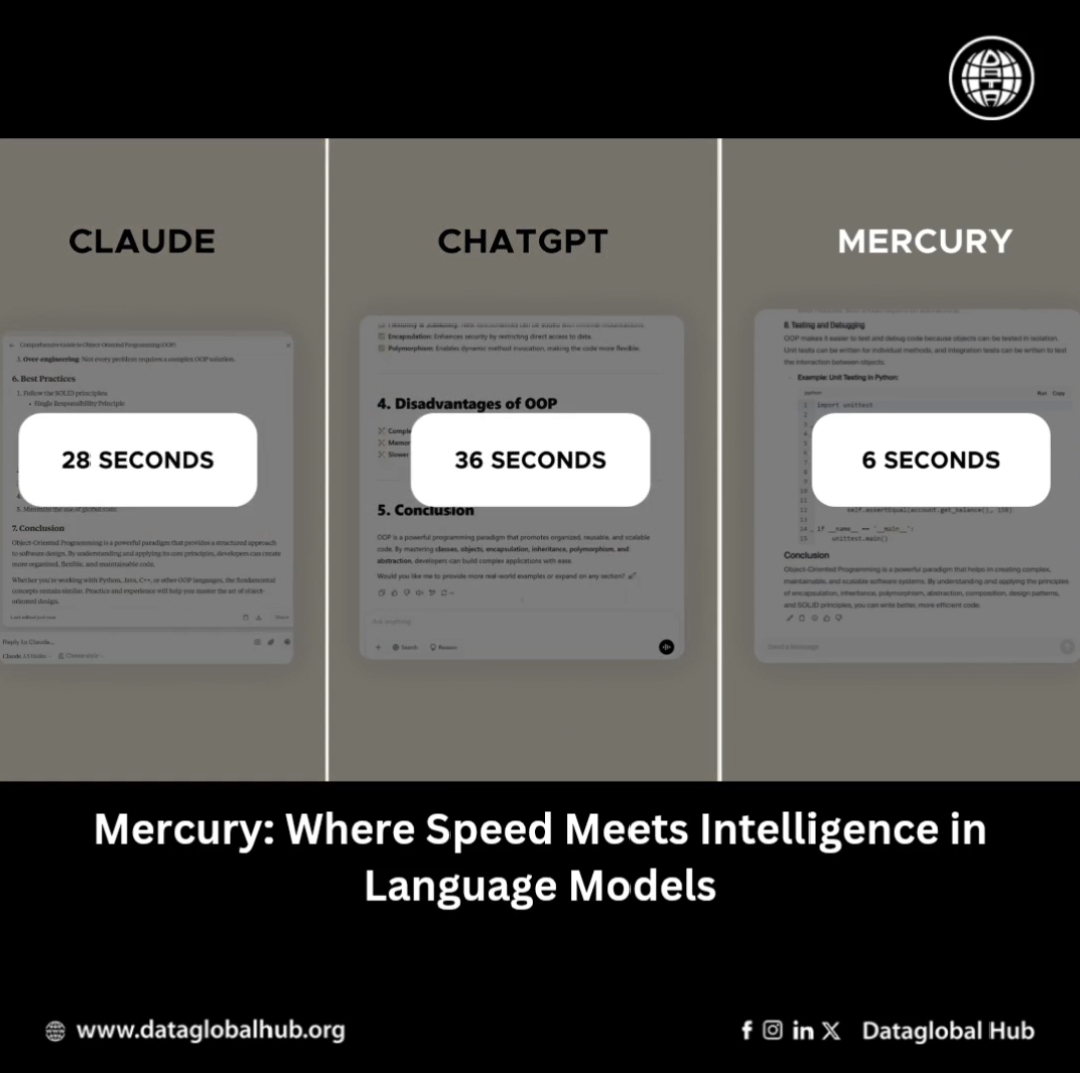

1. Speed: Mercury generates text at over 1000 tokens per second on NVIDIA H100 GPUs, a speed previously achievable only with custom chips. By comparison, some frontier autoregressive models run at fewer than 50 tokens per second.

2. Efficiency: By generating tokens in parallel rather than one at a time, Mercury reduces inference costs, enabling real-time applications such as code generation and customer support without prohibitive latency.

3. Quality: Mercury Coder, the first publicly available dLLM, delivers high-quality performance on coding benchmarks like HumanEval and MBPP, often matching or exceeding the quality of speed-optimized models while being up to 10x faster.

4. Compatibility: Mercury Coder is designed as a drop-in replacement for existing LLMs, supporting workflows like retrieval-augmented generation (RAG), tool use, and agentic systems without requiring significant infrastructure changes.
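To put the throughput figures in concrete terms, the short calculation below converts them into decoding time for a single response. The 500-token completion size is a hypothetical example, and the calculation ignores prompt processing and network latency. Because the article gives "over 1000" and "less than 50" tokens per second, the results are bounds: at most 0.5 s for Mercury and at least 10 s for the slower autoregressive model.

```python
def generation_time_s(num_tokens: int, tokens_per_second: float) -> float:
    """Decoding time only; ignores prompt processing and network latency."""
    return num_tokens / tokens_per_second


COMPLETION_TOKENS = 500  # hypothetical size of one code completion

mercury = generation_time_s(COMPLETION_TOKENS, 1000)  # "over 1000 tokens per second"
slow_ar = generation_time_s(COMPLETION_TOKENS, 50)    # "less than 50 tokens per second"

print(f"Mercury: {mercury:.1f} s, slow AR model: {slow_ar:.1f} s, ratio: {slow_ar / mercury:.0f}x")
# prints "Mercury: 0.5 s, slow AR model: 10.0 s, ratio: 20x"
```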

Mercury Coder: Optimized for Code Generation

Mercury Coder is specifically tailored for coding tasks, delivering high-quality code completions and edits at unprecedented speeds. Benchmarks show it outperforms models like GPT-4o Mini and Gemini-1.5-Flash in speed, while performing competitively in accuracy, making it a strong candidate for developers seeking faster, more efficient tools.

Practical Applications

Mercury’s speed and efficiency make it well-suited for a range of use cases:

About the Author

Mia Cruz

Mia Cruz is an AI news correspondent from the United States.
