Microsoft Releases VibeVoice: An Open-Source TTS Model for Multi-Speaker Conversational Audio


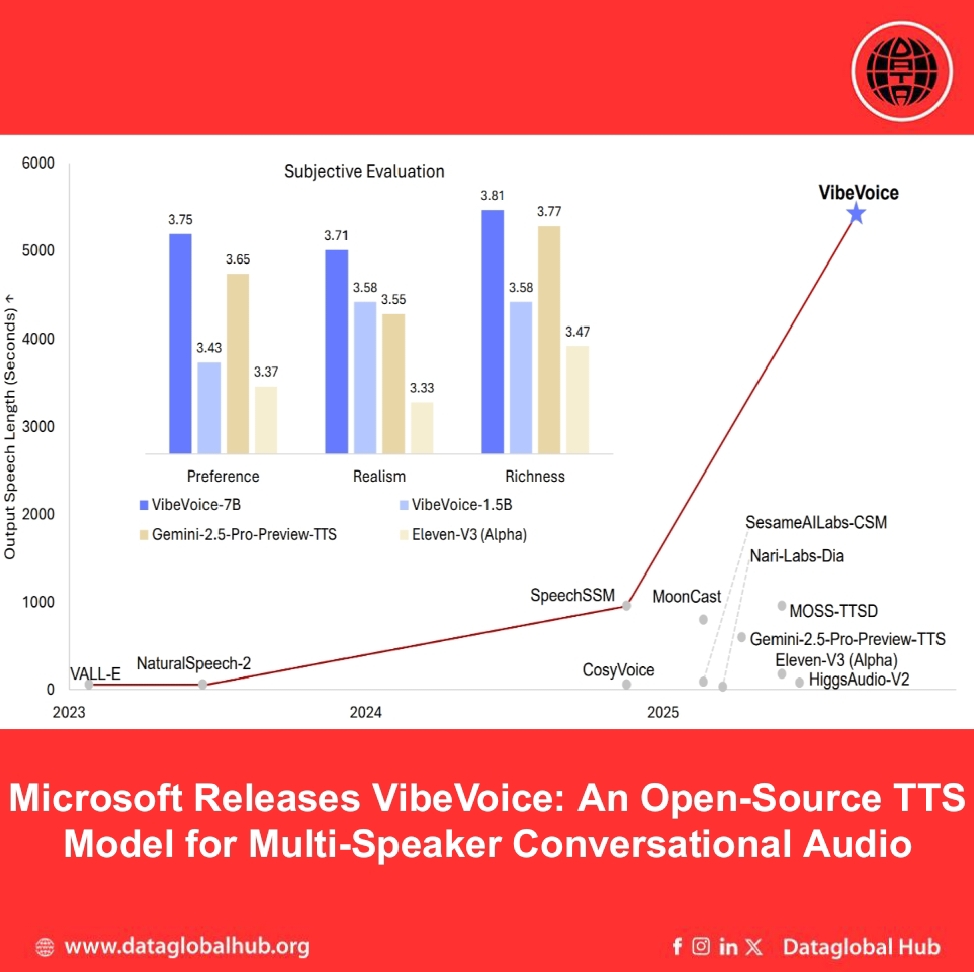

Microsoft Research has introduced VibeVoice, an open-source framework for generating expressive, long-form, multi-speaker conversational audio from text. The model is designed for scenarios such as podcasts and targets long-standing weaknesses of traditional Text-to-Speech (TTS) systems: scalability to long recordings, speaker consistency, and natural turn-taking.

Core Innovations

VibeVoice uses two continuous speech tokenizers, one acoustic and one semantic, that both operate at a frame rate of 7.5 Hz. These tokenizers preserve audio fidelity while keeping long sequences computationally manageable. The model follows a next-token diffusion framework: a Large Language Model (LLM) processes the textual context and dialogue flow, and a diffusion head generates the detailed acoustic output.
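To make the "next-token diffusion" idea concrete, here is a toy sketch of the generation loop, not VibeVoice's actual code. At each step an LLM stand-in summarizes the history into a condition vector, and a diffusion-head stand-in denoises a fresh continuous latent from pure noise under that condition; the new frame is then fed back in. Everything here is hypothetical: `toy_llm_hidden`, `toy_denoiser`, the latent size, the step count, the cosine schedule, and the deterministic DDIM-style update with an x0-predicting denoiser were chosen for brevity, and the real model's parameterization may differ.

```python
import math
import random

LATENT_DIM = 4   # hypothetical; real acoustic latents are much wider
NUM_STEPS = 10   # hypothetical number of denoising steps per frame


def alpha_bar(t):
    """Cosine noise schedule: 1.0 at t=0 (clean), ~0.0 at t=NUM_STEPS (pure noise)."""
    return math.cos((t / NUM_STEPS) * math.pi / 2) ** 2


def toy_llm_hidden(history):
    """Hypothetical LLM stand-in: averages previous latents into a condition vector."""
    if not history:
        return [0.0] * LATENT_DIM
    return [sum(frame[i] for frame in history) / len(history) for i in range(LATENT_DIM)]


def toy_denoiser(x_t, t, cond):
    """Hypothetical diffusion-head stand-in: predicts the clean latent x0.
    It ignores x_t and t; a trained network would use both."""
    return [c + 0.1 for c in cond]


def sample_frame(cond, rng):
    """Deterministic DDIM-style sampling of one continuous latent frame."""
    x = [rng.gauss(0.0, 1.0) for _ in range(LATENT_DIM)]  # start from pure noise
    for t in range(NUM_STEPS, 0, -1):
        ab_t, ab_prev = alpha_bar(t), alpha_bar(t - 1)
        x0_hat = toy_denoiser(x, t, cond)
        # Noise implied by the current sample and the predicted clean latent.
        eps_hat = [(xi - math.sqrt(ab_t) * x0i) / math.sqrt(1.0 - ab_t)
                   for xi, x0i in zip(x, x0_hat)]
        # Move one step toward the clean latent.
        x = [math.sqrt(ab_prev) * x0i + math.sqrt(1.0 - ab_prev) * ei
             for x0i, ei in zip(x0_hat, eps_hat)]
    return x


def generate(num_frames, seed=0):
    rng = random.Random(seed)
    history = []
    for _ in range(num_frames):
        cond = toy_llm_hidden(history)   # 1) "LLM" step: condition on everything so far
        frame = sample_frame(cond, rng)  # 2) "diffusion head": denoise the next latent
        history.append(frame)            # 3) autoregress: feed the new frame back in
    return history


frames = generate(num_frames=3)
for i, frame in enumerate(frames):
    print(i, [round(v, 3) for v in frame])
```

The key design point the sketch illustrates is that each output is a continuous vector produced by an iterative denoiser, rather than a discrete token picked from a vocabulary.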

According to Microsoft, VibeVoice can generate up to 90 minutes of speech with up to four distinct speakers. Many earlier models support only one or two speakers.
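A quick back-of-the-envelope calculation shows why the low 7.5 Hz frame rate matters for 90-minute outputs. The sketch below assumes one context position per audio frame and a 24 kHz audio sample rate; neither assumption comes from the article, and the 50 Hz figure is a hypothetical comparison point, not a specific competing model. The 65,536-token context is the training maximum stated later in the article.

```python
FRAME_RATE_HZ = 7.5       # tokenizer frame rate stated in the article
CONTEXT_TOKENS = 65_536   # maximum training context stated in the article
SAMPLE_RATE_HZ = 24_000   # assumption: 24 kHz audio


def audio_frames(minutes, frame_rate_hz=FRAME_RATE_HZ):
    """Tokenizer frames needed for `minutes` of audio (assumes one position per frame)."""
    return round(minutes * 60 * frame_rate_hz)


frames_90min = audio_frames(90)
headroom = CONTEXT_TOKENS - frames_90min                  # positions left for text, prompts, etc.
samples_per_frame = SAMPLE_RATE_HZ / FRAME_RATE_HZ        # compression of the waveform per frame
frames_at_50hz = audio_frames(90, frame_rate_hz=50.0)     # hypothetical higher-rate tokenizer

print(f"90 min at 7.5 Hz: {frames_90min:,} frames")
print(f"context headroom: {headroom:,} positions")
print(f"samples per frame at 24 kHz: {samples_per_frame:,.0f}")
print(f"90 min at 50 Hz: {frames_at_50hz:,} frames (fits in context: {frames_at_50hz <= CONTEXT_TOKENS})")
```

Under these assumptions, 90 minutes of audio fits inside the 64k context with room to spare, while a 50 Hz tokenizer would need several times the available context.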

Training and Architecture Details

The model is built around a transformer-based LLM integrated with acoustic and semantic tokenizers and a diffusion-based decoding head.

LLM: Qwen2.5-1.5B is used for this release.

The context length is extended progressively during training, up to 65,536 tokens. Training proceeds in stages: first, the acoustic and semantic tokenizers are pre-trained separately; then, during VibeVoice training, both tokenizers are frozen and only the LLM and the diffusion head are trained. A curriculum learning strategy increases the input sequence length from 4k to 16k, 32k, and finally 64k tokens. Text is tokenized with the LLM's default tokenizer, while audio is handled by the acoustic and semantic tokenizers.
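The training recipe above can be summarized as a small configuration sketch. It records which components are frozen and the four curriculum lengths, reading "4k … 64k" as binary thousands so the final stage matches the stated 65,536-token context. How long samples are fitted to each stage is not described in the article; the `split_for_stage` windowing function is a hypothetical illustration, and real pipelines might filter, crop, or pack sequences instead.

```python
# Sequence-length curriculum from the article, assuming binary thousands (64k = 65,536).
CURRICULUM = [4_096, 16_384, 32_768, 65_536]

# Which components receive gradient updates during VibeVoice training, per the article.
TRAINABLE = {
    "acoustic_tokenizer": False,  # pre-trained separately, then frozen
    "semantic_tokenizer": False,  # pre-trained separately, then frozen
    "llm": True,                  # Qwen2.5-1.5B backbone
    "diffusion_head": True,
}


def trainable_components():
    """Names of the components that are updated during VibeVoice training."""
    return sorted(name for name, is_trainable in TRAINABLE.items() if is_trainable)


def split_for_stage(tokens, stage):
    """Hypothetical: cut a long sequence into windows no longer than the stage's limit."""
    max_len = CURRICULUM[stage]
    return [tokens[i:i + max_len] for i in range(0, len(tokens), max_len)]


# Example: a 40,500-position sequence (about 90 minutes of audio at 7.5 Hz).
episode = list(range(40_500))
for stage, max_len in enumerate(CURRICULUM):
    windows = split_for_stage(episode, stage)
    print(f"stage {stage}: max {max_len:>6} tokens -> {len(windows)} window(s)")
print("trainable:", trainable_components())
```

In a PyTorch implementation, the frozen entries would typically correspond to calling `requires_grad_(False)` on those modules, so the optimizer only updates the LLM and the diffusion head.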

Intended Uses and Restrictions

VibeVoice is intended for research use only, specifically for exploring highly realistic audio dialogue generation, as outlined in the technical report.

Out-of-scope uses include anything that violates applicable laws or regulations (including trade compliance laws) or the terms of the MIT License. The model is also not intended for generating text transcripts. Prohibited scenarios include:

Risks, Limitations, and Recommendations

The model may produce unexpected, biased, or inaccurate outputs, and it inherits any biases, errors, or omissions of its base LLM (Qwen2.5-1.5B). Key risks include deepfakes and disinformation: high-quality synthetic speech could be misused for impersonation, fraud, or spreading false information. Users are advised to verify the reliability of transcripts, check content accuracy, and avoid misleading applications.

Additional limitations:

To address misuse risks, the following measures are implemented:

Users must source datasets legally and ethically, including securing the necessary rights and anonymizing data where needed, with attention to privacy concerns. Users are also encouraged to disclose the use of AI when sharing generated content.

About the Author

Liang Wei

Liang Wei is our AI correspondent from China.
