Mistral Small 3.1 Raises the Bar for Lightweight AI Models

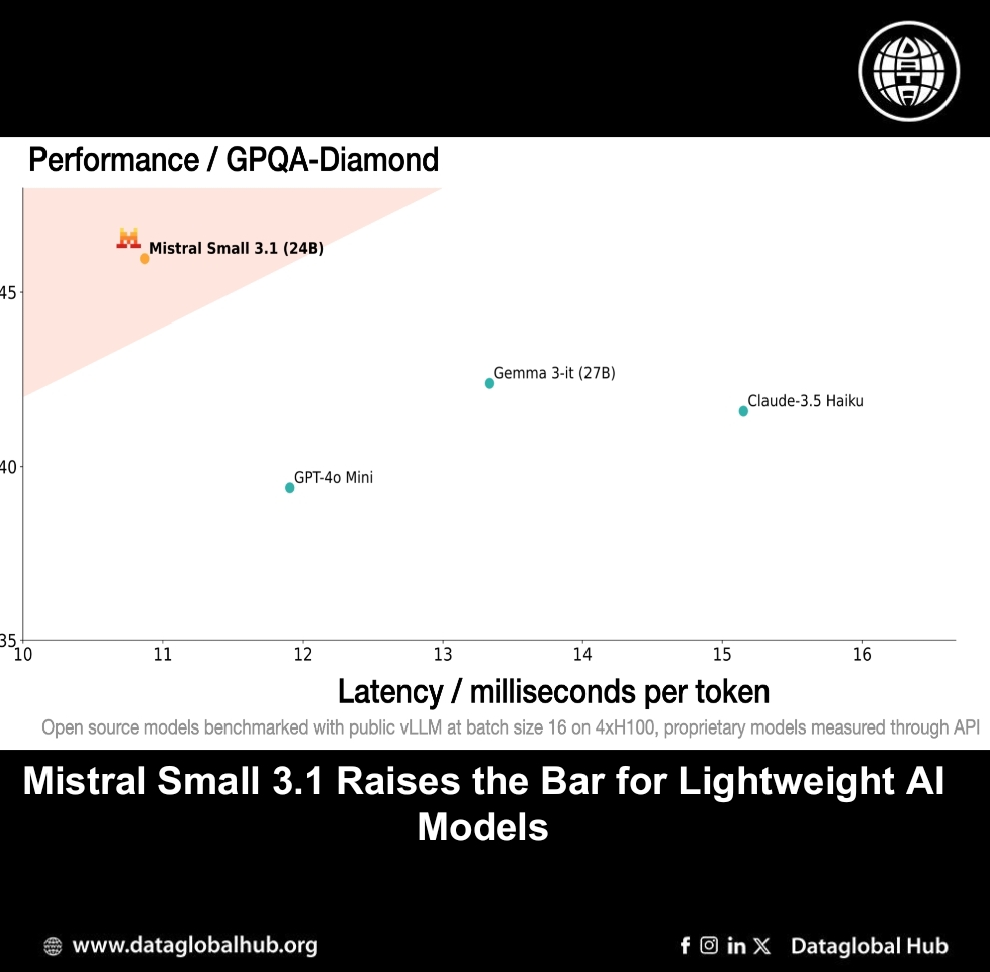

Mistral has released Mistral Small 3.1, a model that sets a new benchmark for performance in its class. Building on Mistral Small 3, this version offers improved text capabilities, enhanced multimodal understanding, and an expanded context window of up to 128k tokens. Despite its relatively compact size (24B parameters), it outperforms competitors such as Gemma 3, GPT-4o Mini, and Cohere Aya-Vision on multiple benchmarks, and it delivers inference speeds of 150 tokens per second.

Key Features and Capabilities

Performance Highlights

Mistral Small 3.1 has been tested across a variety of benchmarks against other models in its class.

Availability and Deployment

Mistral Small 3.1 can be downloaded from:

Hugging Face (base model): https://huggingface.co/mistralai/Mistral-Small-3.1-24B-Base-2503

Hugging Face (instruct model): https://huggingface.co/mistralai/Mistral-Small-3.1-24B-Instruct-2503

Mistral AI’s developer playground: https://mistral.ai/news/la-plateforme

Google Cloud Vertex AI: https://cloud.google.com/vertex-ai/generative-ai/docs/partner-models/mistral
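For readers who prefer to fetch the weights programmatically, the Hugging Face repositories listed above can be addressed by their repository IDs. The following is a minimal sketch, not an official recipe: the `REPOS` mapping and the `repo_url` and `download` helpers are hypothetical names introduced here for illustration, and the download step assumes the `huggingface_hub` package is installed and that your account has access to the repository.

```python
# Repository IDs taken from the Hugging Face links in this article.
REPOS = {
    "base": "mistralai/Mistral-Small-3.1-24B-Base-2503",
    "instruct": "mistralai/Mistral-Small-3.1-24B-Instruct-2503",
}


def repo_url(variant: str) -> str:
    """Return the Hugging Face page URL for a variant ("base" or "instruct")."""
    return f"https://huggingface.co/{REPOS[variant]}"


def download(variant: str, local_dir: str) -> str:
    """Download every file of the chosen variant and return the local folder path.

    Assumption: `pip install huggingface_hub` has been run, and any access
    terms on the repository have been accepted (log in first if required).
    """
    # Imported lazily so the URL helper works without the package installed.
    from huggingface_hub import snapshot_download

    return snapshot_download(repo_id=REPOS[variant], local_dir=local_dir)


if __name__ == "__main__":
    # Only prints the URLs; call download(...) explicitly to fetch the weights.
    for name in REPOS:
        print(name, repo_url(name))
```

Calling `download("instruct", "./mistral-small-3.1")` would start the actual transfer. A 24B-parameter checkpoint is large, so check available disk space before running it.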

With this release, Mistral continues to provide an open-source alternative that balances performance, accessibility, and efficiency. For those looking to build AI-driven applications without the overhead of larger proprietary models, Mistral Small 3.1 presents a compelling option.

About the Author

Ryan Chen

Ryan Chen is an AI correspondent from Chain.
