OpenAI Rolls Out Lockdown Mode for ChatGPT to Thwart Prompt Injection Attacks


OpenAI is introducing two new security features across its ChatGPT ecosystem, aimed at helping users and organizations mitigate the growing risk of prompt injection attacks.

Prompt injection, the company explains, occurs when a third party attempts to mislead a conversational AI into following malicious instructions or revealing sensitive information. As AI systems take on more complex tasks involving the web and connected apps, the security stakes rise accordingly.

Lockdown Mode for High-Risk Users

Lockdown Mode is an optional, advanced security setting designed for a small subset of highly security-conscious users—such as executives, security teams at prominent organizations, or others at elevated risk of targeted cyberattacks. The company emphasizes that it is not necessary for most users.

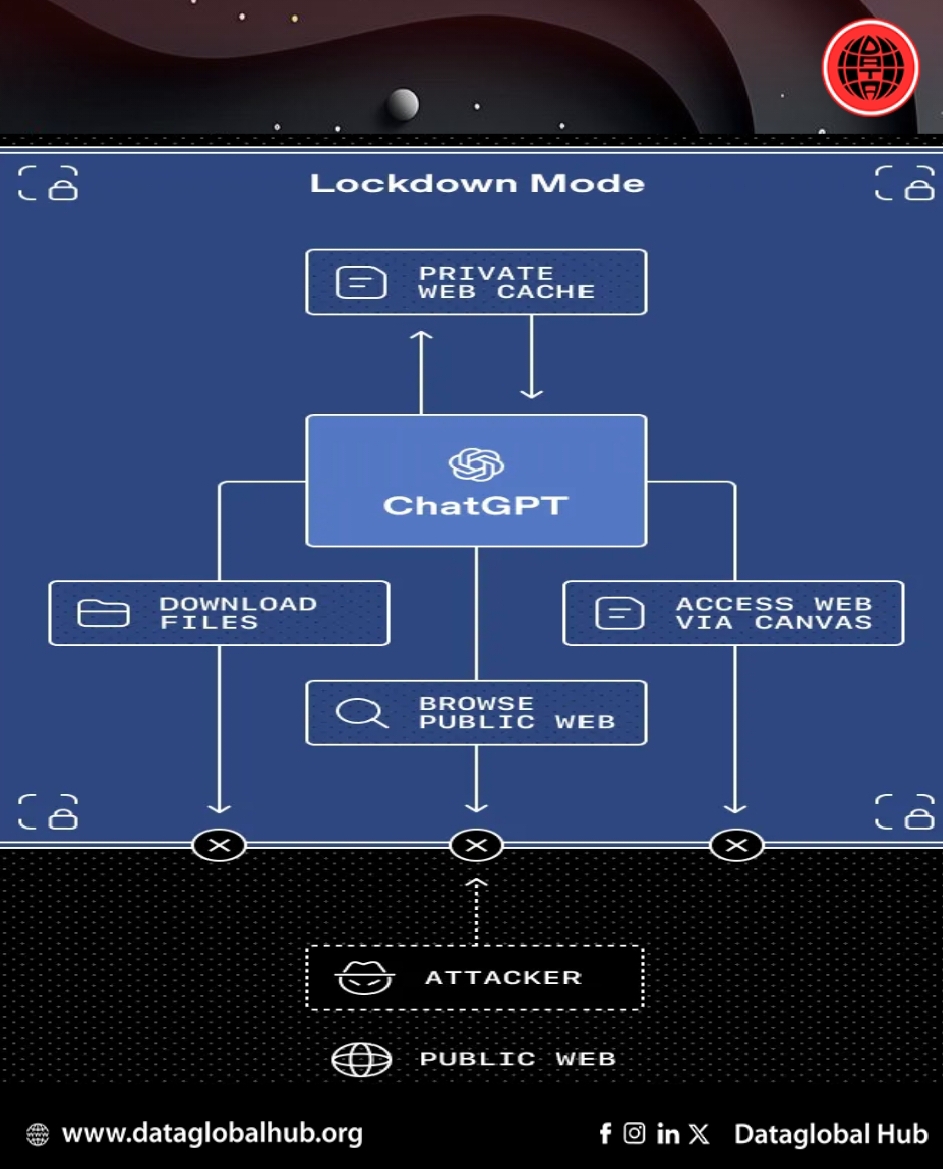

When enabled, Lockdown Mode tightly constrains how ChatGPT can interact with external systems to reduce the risk of prompt injection–based data exfiltration. Certain tools and capabilities are deterministically disabled or restricted. For example, web browsing in Lockdown Mode is limited to cached content only; no live network requests leave OpenAI's controlled network, preventing sensitive data from being exfiltrated to an attacker through browsing. Features for which OpenAI cannot provide strong deterministic guarantees of data safety are disabled entirely.

The setting is available for ChatGPT Enterprise, ChatGPT Edu, ChatGPT for Healthcare, and ChatGPT for Teachers. Admins can enable it in workspace settings by creating a new role, and the feature layers additional restrictions on top of existing enterprise controls such as role-based access and audit logs.

Workspace Admins retain granular control over which apps—and which specific actions within those apps—are available to users in Lockdown Mode. The Compliance API Logs Platform provides detailed visibility into app usage, shared data, and connected sources.

OpenAI plans to make Lockdown Mode available to consumers in the coming months.

Elevated Risk Labels

The second new feature is a standardized “Elevated Risk” label applied to certain capabilities across ChatGPT, ChatGPT Atlas, and Codex. The label is designed to help users make informed choices about features that may introduce additional security risks not yet fully addressed by industry-wide mitigations.

The label appears alongside a clear explanation of what changes, what risks may be introduced, and when that access is appropriate. In Codex, for example, developers who grant the coding assistant network access to look up documentation will see the label on the relevant settings screen.

OpenAI states that it will remove the label from a feature once its safeguards have matured enough to mitigate the risks for general use. The company will also keep updating which features carry the label.

Existing Protections

The new measures build on existing protections at the model, product, and system levels, including sandboxing, URL-based data exfiltration protections, monitoring and enforcement, and enterprise controls. The announcement reinforces OpenAI's commitment to addressing emerging and growing security risks as AI systems take on increasingly complex, connected tasks.

About the Author

Liang Wei

Liang Wei is our AI correspondent from China.
