OpenAI has introduced a new series of GPT models, GPT-4.1, GPT-4.1 mini, and GPT-4.1 nano, which are currently available exclusively through its API. These models deliver notable improvements in coding, instruction following, and long-context processing, offering developers more reliable and cost-effective tools for building applications. With a knowledge cutoff of June 2024 and support for up to 1 million tokens of context, the GPT-4.1 family is designed to address real-world developer needs.

Key Improvements

- Coding Performance: GPT-4.1 significantly outperforms its predecessors on coding tasks. On SWE-bench Verified, a benchmark for real-world software engineering, GPT-4.1 achieves a 54.6% success rate, compared to 33.2% for GPT-4o. It excels at generating accurate code patches, cutting extraneous edits from 9% with GPT-4o to 2%, and at adhering to diff formats, which saves time and compute resources. The model also performs better in frontend coding, with human graders preferring GPT-4.1’s web applications 80% of the time for functionality and aesthetics. In real-world testing, Qodo reported that GPT-4.1 outperformed competitors in 55% of GitHub pull request reviews, excelling at both precision and comprehensiveness.

- Instruction Following: The GPT-4.1 models are more adept at following complex instructions, scoring 38.3% on Scale’s MultiChallenge benchmark, 10.5 percentage points higher than GPT-4o. They handle multi-turn conversations better, maintaining coherence and recalling prior context. On IFEval, GPT-4.1 scores 87.4%, up from 81.0% for GPT-4o, making it more reliable for tasks requiring specific formats or content. Blue J reported a 53% accuracy increase in tax scenario analysis, and Hex saw nearly double the performance on complex SQL evaluations.

- Long-Context Processing: With a 1 million-token context window, up from 128,000 tokens for GPT-4o, GPT-4.1 models can process large codebases or lengthy documents. They demonstrate strong performance in retrieving and disambiguating information across long contexts, as shown in OpenAI’s new OpenAI-MRCR evaluation. On Video-MME, GPT-4.1 scores 72.0% for long video understanding, 6.7 percentage points higher than GPT-4o. Thomson Reuters improved multi-document review accuracy by 17%, and Carlyle achieved 50% better financial data extraction from dense documents.

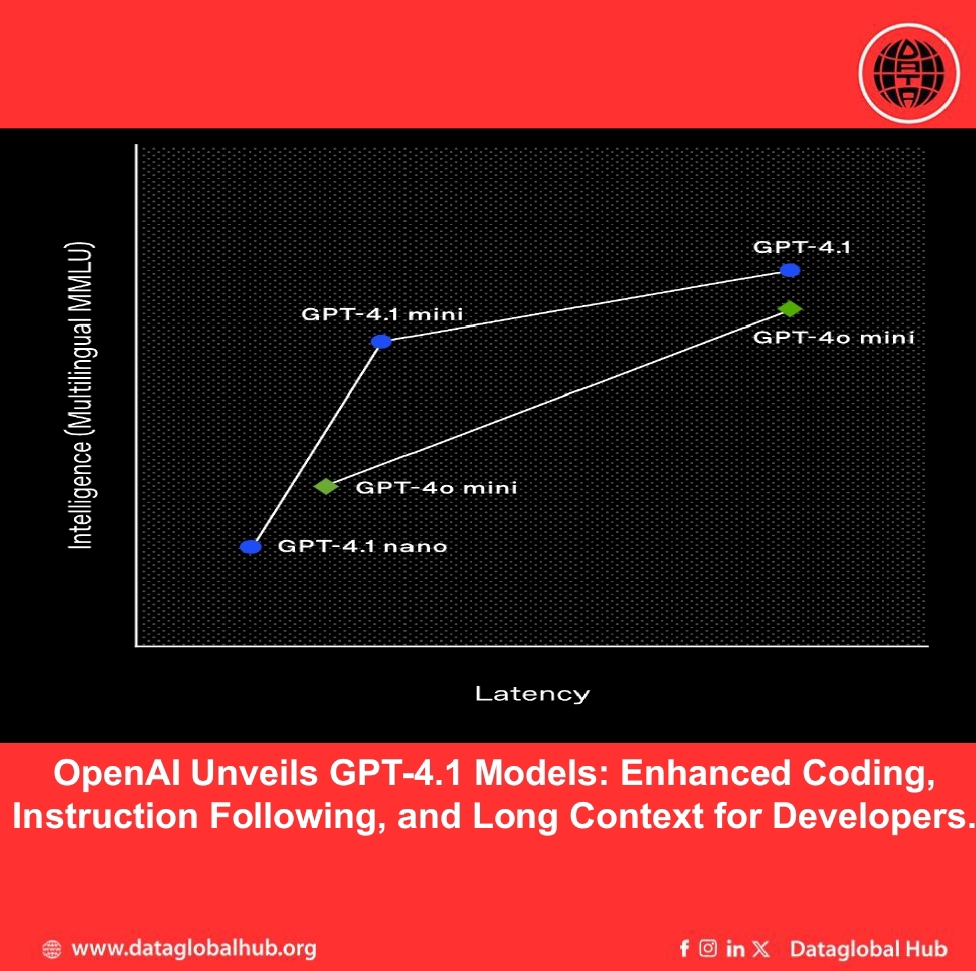

- Smaller, Faster Models: GPT-4.1 mini and nano prioritize efficiency. GPT-4.1 mini matches or exceeds GPT-4o in many benchmarks while cutting latency by nearly half and costs by 83%. GPT-4.1 nano, the fastest and cheapest model, scores 80.1% on MMLU and supports tasks like classification with its 1 million-token context window.

Pricing and Availability

The GPT-4.1 models are available now to API developers. GPT-4.1 is 26% cheaper than GPT-4o for median queries, with input at $2.00 per 1M tokens and output at $8.00 per 1M tokens. GPT-4.1 mini and nano are priced at $0.40 and $0.10 per 1M input tokens, respectively. Prompt caching discounts have increased to 75%. GPT-4.5 Preview, which was introduced as a research preview to explore and experiment with a large, compute-intensive model, will be turned off by July 14, 2025, as GPT-4.1 offers similar or better performance at lower cost.
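
To make the pricing concrete, the figures above can be turned into a rough cost estimate. The Python sketch below is illustrative only: the function names and dictionary keys are hypothetical, and it assumes the 75% caching discount applies as a flat reduction on the input price for the cached share of the prompt. Output pricing is included only for GPT-4.1, since the article does not quote output prices for mini or nano.

```python
# Input prices in USD per 1M tokens, taken from the figures quoted in the article.
# The dictionary keys are labels chosen for this sketch.
INPUT_PRICE_PER_M = {
    "gpt-4.1": 2.00,
    "gpt-4.1-mini": 0.40,
    "gpt-4.1-nano": 0.10,
}

# Output price for GPT-4.1 only (the article gives no output price for mini/nano).
GPT_41_OUTPUT_PRICE_PER_M = 8.00

# Assumption: cached input tokens are billed at a 75% discount off the input price.
CACHE_DISCOUNT = 0.75


def input_cost(model: str, input_tokens: int, cached_tokens: int = 0) -> float:
    """Estimate the input cost in USD for one request."""
    if not 0 <= cached_tokens <= input_tokens:
        raise ValueError("cached_tokens must be between 0 and input_tokens")
    price = INPUT_PRICE_PER_M[model]
    uncached_tokens = input_tokens - cached_tokens
    # Cached tokens count at the discounted rate, uncached tokens at full price.
    billable_tokens = uncached_tokens + cached_tokens * (1 - CACHE_DISCOUNT)
    return price * billable_tokens / 1_000_000


def gpt_41_request_cost(
    input_tokens: int, output_tokens: int, cached_tokens: int = 0
) -> float:
    """Estimate the total cost in USD of one GPT-4.1 request (input plus output)."""
    output_cost = GPT_41_OUTPUT_PRICE_PER_M * output_tokens / 1_000_000
    return input_cost("gpt-4.1", input_tokens, cached_tokens) + output_cost


if __name__ == "__main__":
    # A full 1M-token prompt with half of it served from the cache.
    print(f"${input_cost('gpt-4.1', 1_000_000, cached_tokens=500_000):.2f}")
    # A 100k-token prompt producing a 10k-token answer, nothing cached.
    print(f"${gpt_41_request_cost(100_000, 10_000):.2f}")
```

Under these assumptions, filling the entire 1 million-token window costs about $2.00 on GPT-4.1 and about $0.10 on GPT-4.1 nano, which is why the smaller models suit high-volume tasks such as classification. Actual billing may differ, so treat the result as an estimate.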

By focusing on performance, reliability, and cost, OpenAI is enabling a wide range of applications, from legal research to financial analysis. Developers can explore these capabilities through the API and refer to OpenAI’s prompting guide for best practices. As the developer community continues to innovate, the GPT-4.1 series provides a robust foundation for creating intelligent, efficient systems.