Sakana AI Researchers Introduce DroPE, a Method to Extend LLM Context by Removing Positional Embeddings

A team of researchers from Sakana AI and the University of Oxford has introduced a new method, dubbed DroPE, designed to extend the functional context window of large language models (LLMs) without the need for expensive, long-context retraining.

The research addresses a core limitation of current transformer-based LLMs. Many of them, including the Llama family, rely on positional embeddings, specifically RoPE (Rotary Position Embedding), to encode the order of tokens during training, but these same embeddings become a bottleneck later on. When prompts exceed the model's original training length, performance and coherence often degrade significantly.
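To make the mechanism concrete, here is a minimal sketch of how RoPE injects position into attention. It is a generic illustration of the rotary scheme, not code from the DroPE project; the function names `rope` and `score`, the toy vectors, and the base of 10000 are assumptions chosen for the example. Each pair of query and key dimensions is rotated by an angle proportional to the token's position, so the attention score ends up depending on the offset between the two positions.

```python
import math

def rope(vec, pos, base=10000.0):
    """Rotate consecutive pairs of `vec` by position-dependent angles.

    Assumes len(vec) is even. Pair j (dimensions 2j and 2j+1) uses the
    frequency base ** (-2j / d), written below as base ** (-i / d) with i = 2j.
    """
    d = len(vec)
    out = []
    for i in range(0, d, 2):
        theta = pos * base ** (-i / d)
        c, s = math.cos(theta), math.sin(theta)
        x, y = vec[i], vec[i + 1]
        out += [x * c - y * s, x * s + y * c]
    return out

def score(q, k, q_pos, k_pos):
    """Dot product between a rotated query and a rotated key."""
    return sum(a * b for a, b in zip(rope(q, q_pos), rope(k, k_pos)))

# Toy 4-dimensional query and key (hypothetical values).
q = [0.3, -1.2, 0.8, 0.5]
k = [1.0, 0.4, -0.7, 0.2]

near = score(q, k, 3, 1)        # offset 2, early in the sequence
far = score(q, k, 1003, 1001)   # offset 2, much later in the sequence
other = score(q, k, 5, 1)       # offset 4
```

In this sketch `near` and `far` agree up to floating-point error, while `other` differs, because only the offset matters. One common reading of the long-context problem is that offsets larger than anything seen in training produce rotation angles the model never learned to interpret.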

The Core Idea: A Training Scaffold

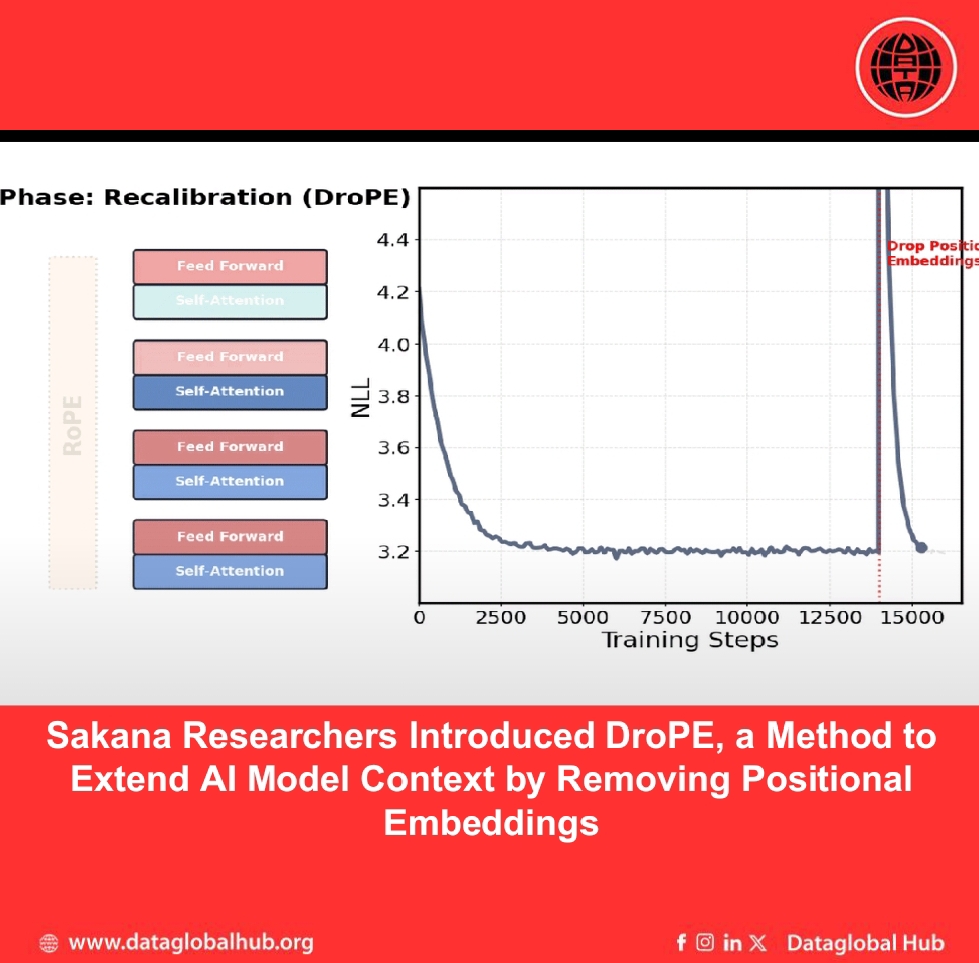

The proposed method, DroPE (Dropping Positional Embeddings), treats positional embeddings as a temporary scaffold. According to the technical report published in January 2026, the process involves two key steps after initial pretraining:

1. Removing the RoPE positional embeddings from the fully pretrained model.

2. Recalibrating the model with a short continuation of training on standard-length data (using the original context window).

This brief recalibration phase, which the authors state can require as little as 0.5% of the original pretraining budget, allows the model to recover its base performance while shedding the positional encoding that limits long-context generalization.
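The first of the two steps can be sketched with a toy attention function in which the rotary embedding is an optional flag. This is an illustrative assumption about what "removing RoPE" means at the level of attention scores, not the authors' implementation; `causal_attention_weights`, `use_rope`, and the toy vectors are hypothetical names and values, and the recalibration step (continued training) is not modeled here.

```python
import math

def rope(vec, pos, base=10000.0):
    """Rotate consecutive pairs of `vec` by position-dependent angles (even length assumed)."""
    d = len(vec)
    out = []
    for i in range(0, d, 2):
        theta = pos * base ** (-i / d)
        c, s = math.cos(theta), math.sin(theta)
        out += [vec[i] * c - vec[i + 1] * s, vec[i] * s + vec[i + 1] * c]
    return out

def causal_attention_weights(queries, keys, use_rope):
    """Return one row of softmax attention weights per query position."""
    d = len(queries[0])
    rows = []
    for m, q in enumerate(queries):
        qm = rope(q, m) if use_rope else q
        scores = []
        for n in range(m + 1):  # causal mask: position m only sees positions <= m
            kn = rope(keys[n], n) if use_rope else keys[n]
            scores.append(sum(a * b for a, b in zip(qm, kn)) / math.sqrt(d))
        peak = max(scores)  # subtract the max for a numerically stable softmax
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        rows.append([e / total for e in exps] + [0.0] * (len(queries) - m - 1))
    return rows

# The same token repeated four times, so every query and key vector is identical.
queries = [[0.3, -1.2, 0.8, 0.5]] * 4
keys = [[1.0, 0.4, -0.7, 0.2]] * 4

with_rope = causal_attention_weights(queries, keys, use_rope=True)
without_rope = causal_attention_weights(queries, keys, use_rope=False)
```

With `use_rope=True`, the last row of weights varies by position even though the tokens are identical. With `use_rope=False`, the same row is uniform over the visible prefix, and the causal mask is the only remaining source of order information in this toy setup.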

Reported Performance and Evaluation

The research paper claims this approach enables "seamless zero-shot context extension," meaning the adapted model can process texts much longer than it was ever specifically trained on without additional modification.

The team reports validating DroPE on models of up to 7 billion parameters, including variants of the SmolLM family and Llama2-7B, pretrained on datasets of up to a trillion tokens. Key evaluations included:

· Needle-in-a-Haystack Tests: Assessing the model's ability to retrieve specific information from very long texts. DroPE reportedly "substantially outperform[s]" other context-extension methods.

· LongBench Benchmark: A suite of tasks like summarization and multi-document QA. The paper states DroPE improved the base SmolLM model's average score "by over 10 times" on this benchmark.
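For readers unfamiliar with the first evaluation, a needle-in-a-haystack test case can be sketched as follows. This is a generic illustration of the idea, not the harness used in the paper; `build_haystack`, `retrieved`, the filler sentence, and the needle are all hypothetical.

```python
def build_haystack(needle, filler, n_filler, depth):
    """Place `needle` among `n_filler` copies of `filler` at a relative depth in [0, 1]."""
    sentences = [filler] * n_filler
    index = round(depth * n_filler)  # 0.0 puts the needle first, 1.0 puts it last
    sentences.insert(index, needle)
    return " ".join(sentences)

def retrieved(model_answer, expected):
    """Score a response with a case-insensitive substring match."""
    return expected.lower() in model_answer.lower()

needle = "The secret code is 7421."
filler = "The weather was unremarkable that day."
question = "What is the secret code?"

# A long prompt with the needle buried halfway through the filler text.
prompt = build_haystack(needle, filler, n_filler=1000, depth=0.5)
```

A full evaluation would sweep both the prompt length and the needle depth, send `prompt` followed by `question` to the model, and record how often `retrieved` returns true for the expected answer.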

Potential Implications

If the findings hold broadly, the DroPE method could offer a more efficient path for developers to adapt existing, powerful LLMs for applications requiring analysis of long documents, lengthy transcripts, or extended codebases, without the prohibitive cost of full long-context retraining.

The authors have released the project's code as open source, and the full technical paper is available online.

About the Author

Leo Silva

Leo Silva is an AI correspondent from Brazil.
