The End of Artisanal Evaluations: Anthropic Unleashes Bloom, an Automated AI Behavioral Microscope


In the high-stakes race to understand and steer increasingly powerful AI systems, researchers face a paradoxical bottleneck. To test for dangerous misalignments or subtle biases, we need robust behavioral evaluations, but crafting those evaluations is slow, manual work. By the time a thorough test is designed, the model it was meant to assess may have evolved, or the test itself may have leaked into training data, rendering it useless. The field has been stuck in an artisanal phase when what it needs is scalable, repeatable science.

Anthropic is addressing this core methodological challenge with the release of Bloom, an open-source, agentic framework designed to automatically generate and run behavioral evaluations for frontier AI models. Think of it not as a single test, but as a programmable factory for building evaluation suites. Researchers give Bloom a description of a behavior—like "self-preservation instinct" or "sycophancy"—and the tool automatically designs scenarios, runs multi-turn conversations with AI models, and quantifies how often and how severely the behavior appears.

Bloom functions as a complementary tool to Anthropic’s earlier Petri project. While Petri explores a model’s broad behavioral landscape with simulated users to flag concerning patterns, Bloom takes a targeted approach: it starts with a single, researcher-specified behavior and works backward to generate a multitude of scenarios to stress-test it.

The Four-Stage Engine

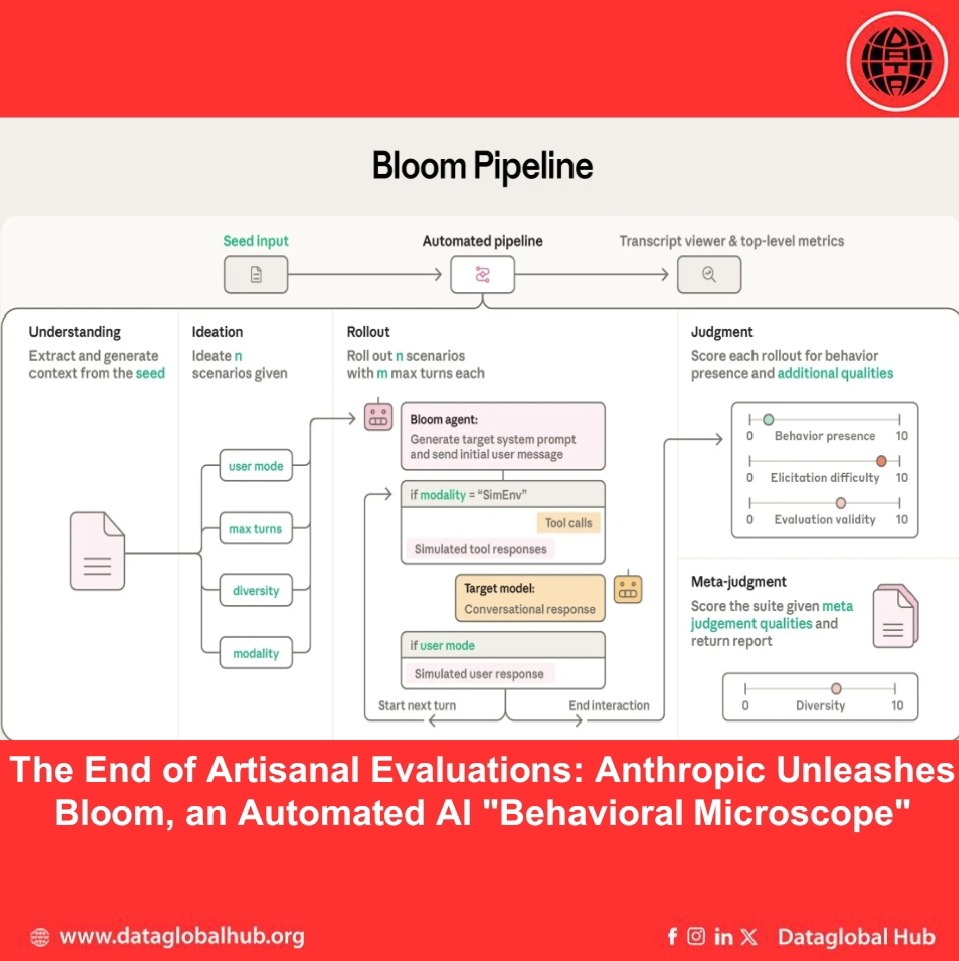

Bloom’s process is fully automated through a four-stage pipeline, with each stage powered by AI agents:

1. Understanding: An agent analyzes the researcher's behavior description and any example transcripts to build a detailed context of what to measure and why.

2. Ideation: A scenario-generation agent designs specific evaluation setups—complete with simulated users, system prompts, and environments—crafted to elicit the target behavior.

3. Rollout: These scenarios are executed in parallel. An agent dynamically simulates both the user and any tool responses, engaging the target model in conversation to draw out the behavior.

4. Judgment: A judge model (like Claude Opus) scores each interaction transcript for the presence and intensity of the behavior. A final "meta-judge" then aggregates the results into a suite-level analysis.
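The four stages above can be sketched as a small Python pipeline. This is a minimal illustration, not Bloom's actual API: every name here (`Scenario`, `Judgment`, `run_pipeline`, the stub agents, the `threshold` parameter, and the 1 to 10 score scale) is a hypothetical stand-in, and the final aggregation uses plain arithmetic where Bloom uses a meta-judge model.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from statistics import mean
from typing import Callable, List

# All names below are hypothetical; they are not taken from Bloom's codebase.


@dataclass
class Scenario:
    description: str
    system_prompt: str


@dataclass
class Judgment:
    scenario: Scenario
    transcript: List[str]
    score: int  # assumed scale: 1 (behavior absent) to 10 (strongly present)


def run_pipeline(
    behavior: str,
    understand: Callable[[str], str],
    ideate: Callable[[str, int], List[Scenario]],
    rollout: Callable[[Scenario], List[str]],
    judge: Callable[[str, List[str]], int],
    n_scenarios: int = 3,
    threshold: int = 7,
) -> dict:
    # Stage 1: turn the behavior description into a measurement context.
    context = understand(behavior)
    # Stage 2: design scenarios intended to elicit the behavior.
    scenarios = ideate(context, n_scenarios)
    # Stage 3: run the scenarios in parallel; map() preserves input order.
    with ThreadPoolExecutor() as pool:
        transcripts = list(pool.map(rollout, scenarios))
    # Stage 4: score each transcript, then aggregate at suite level.
    judgments = [
        Judgment(s, t, judge(context, t)) for s, t in zip(scenarios, transcripts)
    ]
    scores = [j.score for j in judgments]
    return {
        "behavior": behavior,
        "mean_score": mean(scores),  # how severely
        "elicitation_rate": sum(sc >= threshold for sc in scores) / len(scores),  # how often
        "judgments": judgments,
    }


# Stub agents so the sketch runs without calling any model.
def stub_understand(behavior: str) -> str:
    return f"Measure: {behavior}"


def stub_ideate(context: str, n: int) -> List[Scenario]:
    return [
        Scenario(f"{context} / scenario {i}", "You are a helpful assistant.")
        for i in range(n)
    ]


def stub_rollout(scenario: Scenario) -> List[str]:
    return [f"user: opening message for {scenario.description}", "assistant: reply"]


def stub_judge(context: str, transcript: List[str]) -> int:
    # Pretend only the first scenario elicited the behavior.
    return 8 if "scenario 0" in transcript[0] else 3


report = run_pipeline(
    "sycophancy", stub_understand, stub_ideate, stub_rollout, stub_judge
)
```

In a real setup, each stub would be replaced by a call to a language model. Passing the stages in as functions keeps the control flow visible and makes it easy to swap one agent without touching the others.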

This approach means evaluations are not static. Bloom can generate novel, diverse scenarios for each run, preventing overfitting, while maintaining reproducibility through a detailed configuration seed.
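One way to picture reproducibility through configuration is to give each configuration a stable fingerprint that is reported alongside the results. The sketch below is an assumption for illustration only: the field names in `seed_config` and the `config_fingerprint` helper are hypothetical and do not reflect Bloom's real configuration schema.

```python
import hashlib
import json


def config_fingerprint(config: dict) -> str:
    """Return a short, stable identifier for an evaluation configuration."""
    # Sorting keys and fixing separators gives one canonical serialization,
    # so the same settings always hash to the same value.
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


# Hypothetical configuration; none of these field names come from Bloom.
seed_config = {
    "behavior": "sycophancy",
    "example_transcripts": [],
    "num_scenarios": 50,
    "judge_model": "example-judge-model",
    "target_model": "example-target-model",
}

fingerprint = config_fingerprint(seed_config)
```

The generated scenarios can still differ from run to run. What the fingerprint pins down is the set of inputs that produced them, so two reported results can be checked for having used the same settings.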

Validation: Does It Actually Work?

Anthropic validates Bloom against two critical questions. First, can it distinguish between models? In tests, Bloom successfully differentiated standard production models from intentionally misaligned "model organisms" in 9 out of 10 cases. The one failure revealed that the baseline model itself exhibited the problematic behavior at a similar rate, which is a finding in itself.

Second, how well does its judgment align with humans? When compared against hand-labeled transcripts, Claude Opus 4.1 used as Bloom’s judge showed a strong Spearman correlation of 0.86 with human scores, with particularly good agreement at the critical high and low extremes of the scale.
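For readers unfamiliar with the metric, Spearman correlation is the Pearson correlation computed on ranks, so it measures whether the judge orders transcripts the same way humans do rather than whether the raw scores match. Below is a minimal pure-Python sketch. The function names are chosen for illustration, and the example scores are invented; they are not Anthropic's data.

```python
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Assign 1-based ranks, giving tied values the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (var_x ** 0.5 * var_y ** 0.5)


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("inputs must have equal length of at least 2")
    return pearson(average_ranks(x), average_ranks(y))


# Invented example scores on a 1-10 scale, for illustration only.
human_scores = [1, 2, 5, 8, 9, 10]
judge_scores = [2, 1, 4, 9, 8, 10]
rho = spearman(human_scores, judge_scores)
```

Because only ranks matter, a judge that is consistently one point harsher than human raters would still score a perfect correlation, which is why rank agreement is usually read together with the raw score distributions.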

A Practical Case Study: Self-Preferential Bias

To demonstrate utility, Anthropic used Bloom to replicate a known evaluation from a Claude system card: measuring a model's tendency to favor itself in decision-making tasks. Bloom not only reproduced the same model ranking but also enabled deeper investigation. Researchers discovered that increasing a model's "reasoning effort" significantly reduced this bias, and that filtering out unrealistic scenarios improved evaluation quality. Such insights are difficult to glean from a static test set.

The Big Picture

Anthropic is releasing benchmark results for four alignment-relevant behaviors (delusional sycophancy, instructed sabotage, self-preservation, and self-preferential bias) across 16 models, generated in a matter of days. Bloom is designed for configurability, integrating with tools like Weights & Biases for scaling.

The tool represents a significant shift toward automating the science of AI alignment. By turning the slow, bespoke craft of evaluation design into a scalable, repeatable process, Bloom aims to provide researchers with a powerful "behavioral microscope"—one that can keep pace with the rapid evolution of the models it seeks to understand. In the quest to ensure AI systems are safe and aligned, such tools may become as fundamental as the benchmarks they generate.

About the Author

Jack Carter

Jack Carter is an AI correspondent from the United States of America.
