Alibaba updates open-source Qwen3, rivals GPT-4o and Opus

Alibaba's Qwen Team has released Qwen3-235B-A22B-Instruct-2507, an updated version of its Qwen3-235B-A22B model. The update improves instruction following, logical reasoning, text comprehension, mathematics, science, coding, and tool usage. It also broadens knowledge coverage across multiple languages and gives better responses on open-ended and subjective tasks. Reported benchmark gains include 88.7 on IFEval (up from 83.2) and 87.5 on Creative Writing v3 (up from 80.4).

Key Features

The model supports a context length of 262,144 tokens, which suits tasks that involve very long inputs. It requires Hugging Face Transformers version 4.51.0 or higher and can be deployed through serving frameworks such as SGLang and vLLM. Deployment generally calls for high-end hardware such as multiple GPUs, especially when the full 262,144-token context is used.
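
To make the hardware point concrete, here is a rough back-of-the-envelope estimate of the memory needed just to hold the weights. It assumes roughly 235 billion total parameters (read off the "235B" in the model name); the 80 GB per-GPU figure and the helper names are illustrative assumptions, not published requirements. KV cache and activations come on top of this and grow with context length.

```python
import math

# Assumption: ~235 billion total parameters, inferred from "235B" in the model name.
TOTAL_PARAMS = 235e9

# Bytes per parameter for common weight formats.
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(total_params: float, dtype: str) -> float:
    """Approximate weight memory in decimal gigabytes (1 GB = 1e9 bytes)."""
    return total_params * BYTES_PER_PARAM[dtype] / 1e9


def min_gpus_for_weights(total_params: float, dtype: str, gpu_memory_gb: float) -> int:
    """Lower bound on GPU count; ignores KV cache, activations, and overhead."""
    return math.ceil(weight_memory_gb(total_params, dtype) / gpu_memory_gb)


if __name__ == "__main__":
    for dtype in BYTES_PER_PARAM:
        gb = weight_memory_gb(TOTAL_PARAMS, dtype)
        gpus = min_gpus_for_weights(TOTAL_PARAMS, dtype, gpu_memory_gb=80)
        print(f"{dtype}: ~{gb:.0f} GB of weights, at least {gpus} x 80 GB GPUs")
```

Although only about 22 billion parameters are active per token (the "A22B" in the name), a mixture-of-experts model still needs all of its weights resident in memory, so the total parameter count is the relevant figure for this estimate.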

Performance Highlights

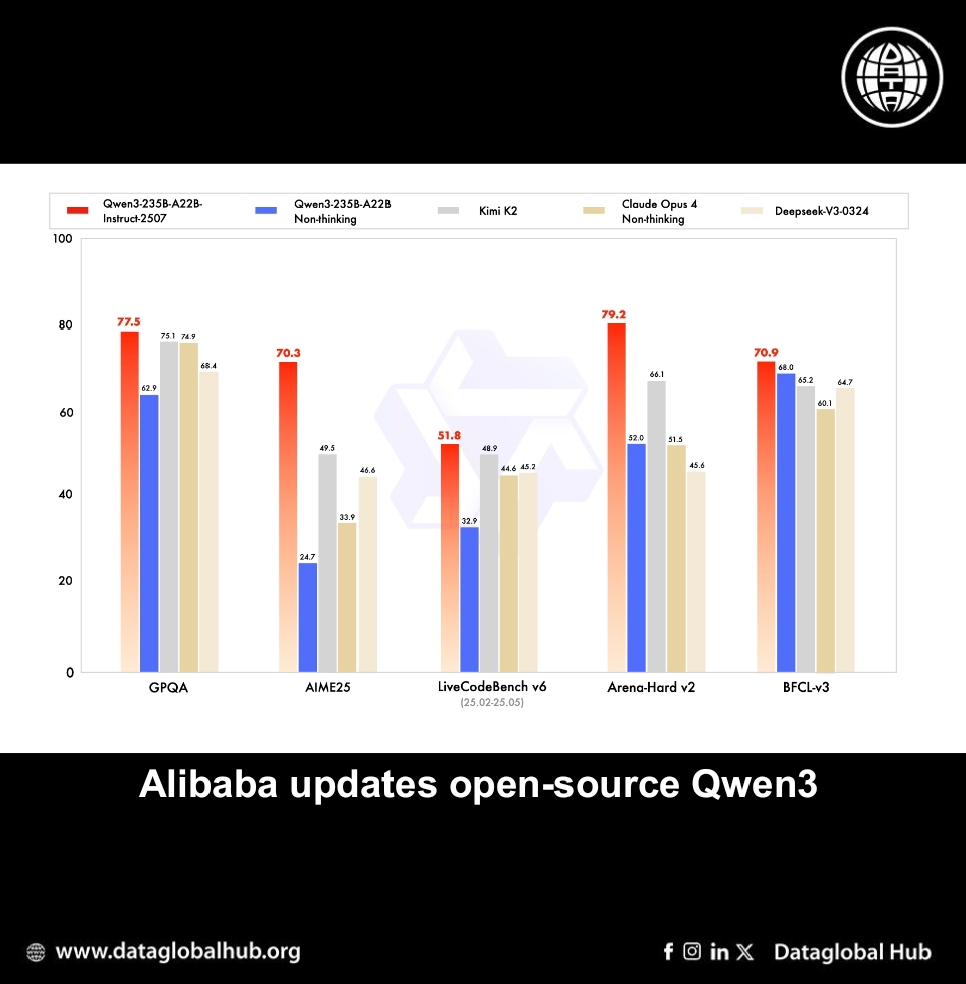

Qwen3-235B-A22B-Instruct-2507 improves significantly on its predecessor and competes closely with models such as DeepSeek-V3, GPT-4o, and Claude Opus on the benchmarks shown above.

It also performs well in multilingual tasks, scoring 79.4 on MMLU-ProX (up from 73.2) and 77.5 on MultiIF (up from 70.2), alongside strong results in creative writing and agent-based tasks.
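
For readers who want the size of each improvement at a glance, the short sketch below computes absolute and relative gains from the four score pairs quoted in this article. The function name and data layout are illustrative; no scores beyond those quoted here are assumed.

```python
# Scores quoted in this article: (previous release, 2507 update).
SCORES = {
    "IFEval": (83.2, 88.7),
    "Creative Writing v3": (80.4, 87.5),
    "MMLU-ProX": (73.2, 79.4),
    "MultiIF": (70.2, 77.5),
}


def gains(scores):
    """Return {benchmark: (absolute gain in points, relative gain in percent)}."""
    result = {}
    for name, (old, new) in scores.items():
        absolute = round(new - old, 1)
        relative = round((new - old) / old * 100, 1)
        result[name] = (absolute, relative)
    return result


if __name__ == "__main__":
    for name, (absolute, relative) in gains(SCORES).items():
        print(f"{name}: +{absolute} points ({relative}% relative)")
```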

Limitations

The model operates in non-thinking mode only, meaning it does not generate <think></think> reasoning blocks in its output. High presence_penalty settings may lead to language mixing. Using the full context length requires substantial memory and can cause out-of-memory errors on standard hardware.
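
As a minimal sketch of how the presence_penalty caveat might be handled in practice, the helper below builds a request body for an OpenAI-compatible chat completions endpoint, the kind of API that vLLM and SGLang can expose. The helper name, the 0 to 2 bound, the default sampling values, and the repository id are assumptions for illustration; consult the model card for recommended settings.

```python
import json

# Assumed Hugging Face repository id for the model discussed in this article.
MODEL_ID = "Qwen/Qwen3-235B-A22B-Instruct-2507"


def build_chat_request(prompt, presence_penalty=0.0, max_tokens=1024):
    """Build a chat completions request body with a bounded presence_penalty.

    The [0, 2] bound is an illustrative assumption: higher values reduce
    repetition but, per the article, may cause language mixing.
    """
    if not 0.0 <= presence_penalty <= 2.0:
        raise ValueError("presence_penalty should stay within [0, 2]")
    return {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
        "presence_penalty": presence_penalty,
        "temperature": 0.7,  # illustrative default, not from the article
        "top_p": 0.8,        # illustrative default, not from the article
        "max_tokens": max_tokens,
    }


if __name__ == "__main__":
    body = build_chat_request("Summarize Qwen3 in one sentence.", presence_penalty=0.5)
    print(json.dumps(body, indent=2))
```

Keeping the bound check in one place means an overly aggressive penalty fails before any request is sent, which is easier to notice than mixed-language output appearing later.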

Availability

The model is available on Hugging Face, with support from several inference providers and 25 quantization options for different hardware setups. Users can try it through Hugging Face’s Playground or community-run Spaces.

For more details, including benchmark results and hardware requirements, visit the Qwen3 Technical Report or Hugging Face’s model page.

About the Author

Simba Gondo
