DeepSeek R1 Model Upgraded with Enhanced Reasoning Capabilities

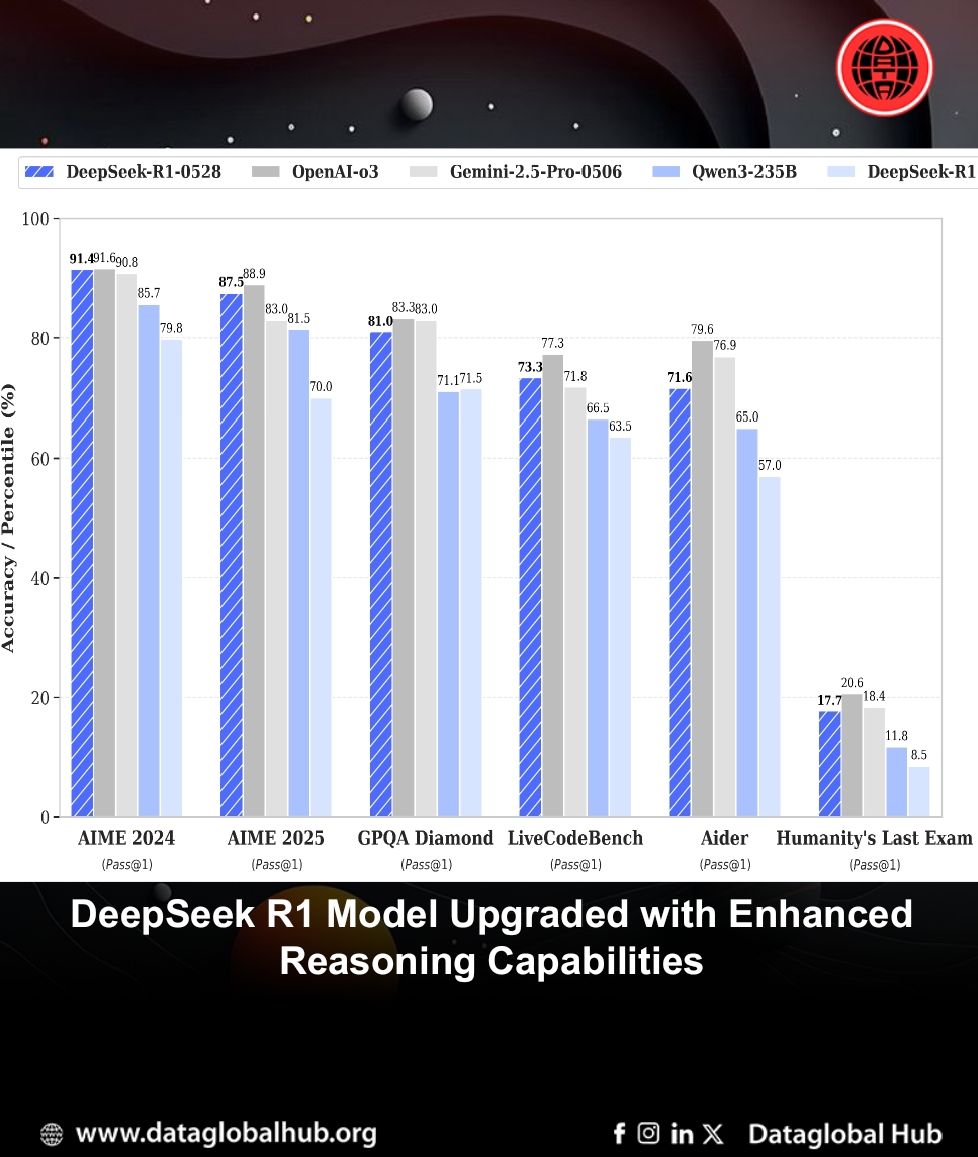

DeepSeek has released an update to its R1 model, now versioned as DeepSeek-R1-0528, with notable improvements in reasoning and performance across multiple benchmarks. This update focuses on strengthening the model’s ability to handle complex tasks in mathematics, programming, and general logic, positioning it as a strong contender among leading AI models.

Key Improvements in DeepSeek-R1-0528

The updated model leverages increased computational resources and optimized algorithms during post-training, resulting in significant performance gains. For example, in the AIME 2025 test, the model’s accuracy improved from 70% in the previous version to 87.5% in the current one. This enhancement is attributed to deeper reasoning processes, with the model now using an average of 23,000 tokens per question compared to 12,000 previously.
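The figures above can be put side by side with a little arithmetic. The sketch below uses only the numbers quoted in this article; the variable names are illustrative, and the relative gain is a derived quantity rather than one reported by DeepSeek.

```python
# AIME 2025 accuracy (percent) and average tokens per question, as quoted above.
old_accuracy, new_accuracy = 70.0, 87.5
old_tokens, new_tokens = 12_000, 23_000

# Absolute gain in percentage points and gain relative to the old score.
point_gain = new_accuracy - old_accuracy
relative_gain = point_gain / old_accuracy

# How much longer the average reasoning trace became.
token_ratio = new_tokens / old_tokens

print(f"{point_gain:.1f} points, {relative_gain:.0%} relative, {token_ratio:.2f}x tokens")
# prints "17.5 points, 25% relative, 1.92x tokens"
```

In other words, a 17.5-point accuracy gain came with a little under twice as many reasoning tokens per question.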

Beyond reasoning, the update reduces hallucination rates, improves function-calling capabilities, and enhances support for coding tasks. The model also supports a system prompt, eliminating the need for specific tags to initiate reasoning patterns.
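As a rough illustration of what system prompt support means in practice, the sketch below builds a chat request in the common role-based message format. The helper name `build_messages` and the example prompts are hypothetical, and the article does not name an endpoint or client library, so the step that sends the request is deliberately left out.

```python
def build_messages(system_prompt, user_prompt):
    """Return a role-based chat message list with an optional system prompt."""
    messages = []
    if system_prompt:
        # The system message carries standing instructions for the whole chat.
        messages.append({"role": "system", "content": system_prompt})
    # The user message carries the actual question; no special tag is added.
    messages.append({"role": "user", "content": user_prompt})
    return messages


messages = build_messages("You are a careful math tutor.", "What is 17 * 24?")
for message in messages:
    print(message["role"], "->", message["content"])
```

The point of the sketch is that the instructions live in an ordinary system message and the user prompt is passed through unchanged.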

Benchmark Performance

DeepSeek-R1-0528 demonstrates strong results across various benchmarks. These results highlight the model’s improved ability to tackle diverse and complex tasks with greater accuracy.

Distilled Model: DeepSeek-R1-0528-Qwen3-8B

DeepSeek also introduced a distilled version, DeepSeek-R1-0528-Qwen3-8B, created by post-training Qwen3 8B Base on chain-of-thought output distilled from DeepSeek-R1-0528. This model achieves top performance among open-source models on AIME 2024, scoring 86.0%, which is 10 percentage points higher than Qwen3 8B and comparable to the much larger Qwen3-235B-thinking model. It shares the same tokenizer configuration as DeepSeek-R1-0528 and can be run in the same way as Qwen3-8B.

Licensing and Accessibility

DeepSeek-R1-0528 and its distilled variant are licensed under the MIT License, supporting both commercial use and distillation. The model, with 685 billion parameters, is available in BF16, F8_E4M3, and F32 tensor types.
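To give a sense of scale, the sketch below estimates the raw weight size for each listed tensor type. It assumes, purely for illustration, that all 685 billion parameters are stored in a single type, which the article does not state; a real checkpoint may mix types, and the estimate ignores activations and any runtime overhead. The names `BYTES_PER_PARAM` and `weight_size_gb` are hypothetical.

```python
# Storage width of each tensor type named in the article, in bytes per value.
BYTES_PER_PARAM = {"F8_E4M3": 1, "BF16": 2, "F32": 4}

# Parameter count quoted in the article.
PARAM_COUNT = 685_000_000_000


def weight_size_gb(param_count, dtype):
    """Approximate weight size in decimal gigabytes for a single tensor type."""
    return param_count * BYTES_PER_PARAM[dtype] / 1e9


for dtype in BYTES_PER_PARAM:
    print(f"{dtype}: about {weight_size_gb(PARAM_COUNT, dtype):,.0f} GB")
```

Under that simplifying assumption the weights alone range from roughly 685 GB at one byte per parameter to about 2,740 GB at four bytes per parameter.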

The updates to DeepSeek-R1-0528 reflect a meaningful step forward in AI model development, particularly in reasoning and task-specific performance. For developers, researchers, and businesses, these improvements offer a robust tool for tackling complex problems in coding, mathematics, and general knowledge tasks. The distilled Qwen3-8B model further expands access to high-performance AI for smaller-scale applications.

For more details, visit DeepSeek’s official website or refer to the research paper on arXiv.

About the Author

Jack Carter

Jack Carter is an AI Correspondent from the United States of America.
