How Claude's Multi-Agent Research System Was Built and What It Reveals About the Future of AI Workflows


Anthropic has offered an in-depth look at the engineering behind its Claude Research feature, a multi-agent system designed to tackle complex, open-ended research tasks by coordinating multiple Claude models, each assigned specific roles.

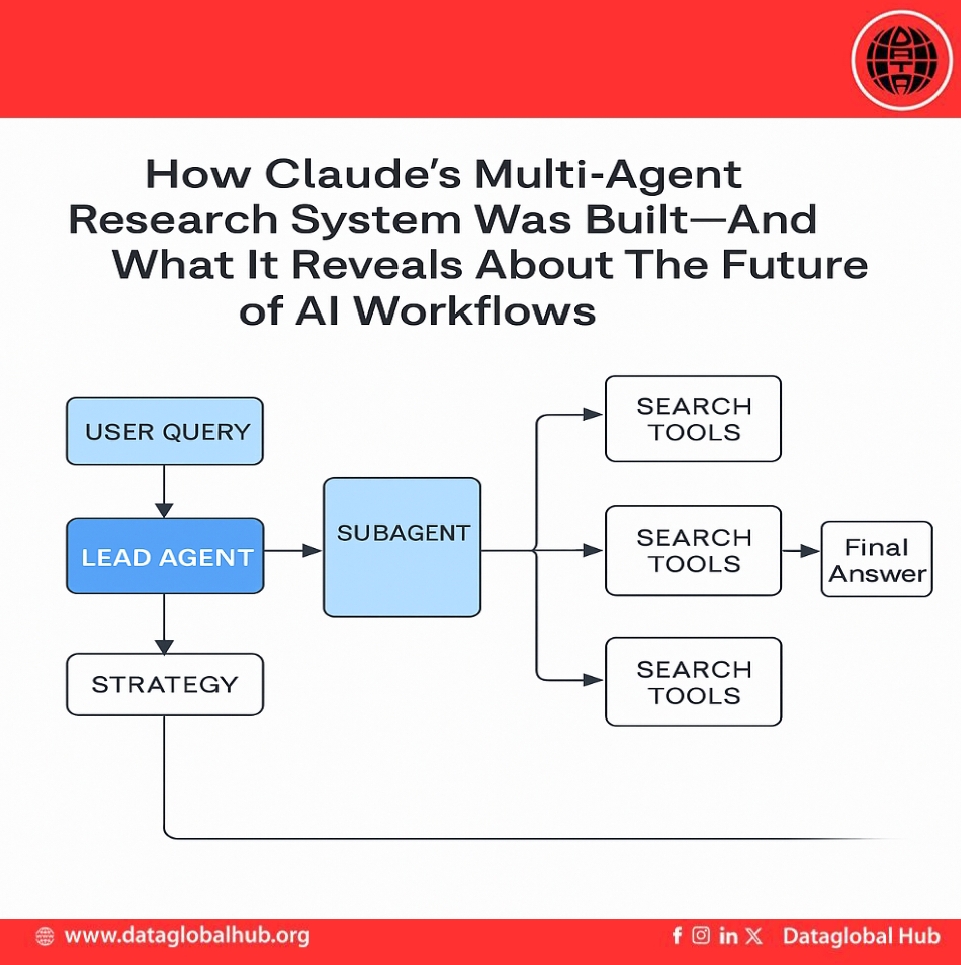

At the heart of this system is an orchestrator-worker pattern. A lead agent (Claude Opus 4) receives a user query, develops a research strategy, and spawns subagents (typically Claude Sonnet 4) to search for different aspects of the topic in parallel. Once the subagents return their findings, the lead agent synthesizes the results and determines whether additional investigation is needed before finalizing a response.
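The orchestrator-worker loop described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Anthropic's implementation: `plan_subtopics`, `run_subagent`, `find_gaps`, and `synthesize` are hypothetical stand-ins for model calls, and a thread pool stands in for parallel subagents.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass
class Finding:
    subtopic: str
    summary: str


def plan_subtopics(query: str) -> list[str]:
    # Hypothetical planner: a real lead agent would ask a model to decompose the query.
    return [f"{query}: background", f"{query}: recent developments", f"{query}: open questions"]


def run_subagent(subtopic: str) -> Finding:
    # Hypothetical worker: a real subagent would search with tools in its own context.
    return Finding(subtopic=subtopic, summary=f"notes on {subtopic}")


def find_gaps(query: str, findings: list[Finding]) -> list[str]:
    # Hypothetical gap check: a real lead agent would decide whether more research is needed.
    return []


def synthesize(query: str, findings: list[Finding]) -> str:
    # Hypothetical synthesis: here the summaries are simply joined into a list.
    lines = [f"- {f.summary}" for f in findings]
    return f"Report for {query!r}\n" + "\n".join(lines)


def research(query: str, max_rounds: int = 2) -> str:
    findings: list[Finding] = []
    subtopics = plan_subtopics(query)
    for _ in range(max_rounds):
        # Subagents run in parallel; map() yields results in submission order.
        with ThreadPoolExecutor(max_workers=max(1, len(subtopics))) as pool:
            findings.extend(pool.map(run_subagent, subtopics))
        subtopics = find_gaps(query, findings)
        if not subtopics:
            break
    return synthesize(query, findings)


if __name__ == "__main__":
    print(research("solid-state batteries"))
```

The point of the sketch is the control flow: the lead plans, workers run concurrently and return condensed findings, and the lead decides whether another round is needed before writing the final answer.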

This architecture enables Claude to handle tasks that exceed the limitations of linear or single-agent systems. Unlike static pipelines that rely on pre-retrieved chunks of data, Claude’s multi-agent system dynamically adapts its research path as new information emerges. This flexibility is particularly well-suited for broad, investigative queries.

In internal tests, Anthropic found that this setup significantly improved performance for tasks requiring breadth-first exploration. For example, when asked to identify all board members of companies in the Information Technology sector of the S&P 500, the multi-agent setup succeeded by distributing subtasks across agents, while the single-agent version failed to produce accurate results. On its internal research evaluation, the company reported that the multi-agent system outperformed a single-agent Claude Opus 4 baseline by 90.2%.

The system’s performance gains were primarily driven by three factors: high token throughput, effective parallel tool usage, and architectural separation of context windows. Subagents explore independently within their own contexts, contributing focused results back to the lead agent. This distribution reduces context saturation and increases reasoning capacity.

However, these gains come with increased computational cost. According to Anthropic, the Research feature typically uses 4× more tokens than standard chat interactions, and multi-agent queries can consume up to 15× more. As a result, the approach is best suited for high-value tasks where the added performance outweighs the cost.

To improve coordination and reduce redundancy between agents, Anthropic leaned heavily on prompt engineering. The team found that carefully crafting instructions for both the lead and subagents helped avoid early issues like duplicate searches, vague objectives, or inefficient tool use. They also embedded scaling heuristics into prompts, allowing the system to dynamically adjust the number of subagents and effort based on task complexity.
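One way to picture a scaling heuristic is as a small lookup table that gets rendered into the lead agent's prompt. The sketch below is hypothetical: the tier names and numbers are illustrative assumptions, not figures from Anthropic, and `budget_for` and `render_prompt_rule` are invented helper names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EffortBudget:
    subagents: int
    tool_calls_per_subagent: int


# Illustrative tiers only; the actual thresholds used in production are not given in the article.
BUDGETS = {
    "simple": EffortBudget(subagents=1, tool_calls_per_subagent=5),
    "comparison": EffortBudget(subagents=3, tool_calls_per_subagent=12),
    "complex": EffortBudget(subagents=10, tool_calls_per_subagent=15),
}


def budget_for(complexity: str) -> EffortBudget:
    """Return the effort budget for a complexity tier, or raise on an unknown tier."""
    try:
        return BUDGETS[complexity]
    except KeyError:
        raise ValueError(f"unknown complexity tier: {complexity!r}") from None


def render_prompt_rule(complexity: str) -> str:
    """Turn a budget into a sentence that could be embedded in a lead-agent prompt."""
    b = budget_for(complexity)
    return (
        f"For {complexity} queries, use at most {b.subagents} subagent(s) "
        f"with roughly {b.tool_calls_per_subagent} tool calls each."
    )


if __name__ == "__main__":
    for tier in BUDGETS:
        print(render_prompt_rule(tier))
```

Keeping the budget in data rather than scattered through prompt text makes it easier to tune effort levels without rewriting the instructions themselves.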

Tool design also played a critical role. Agents were guided with heuristics for selecting the right tool for each job—whether searching the web, scanning documents, or using integrated systems. Additionally, Claude models were used to refine tool descriptions and test usability, resulting in improved task completion times.

The Claude Research system includes a CitationAgent that processes the collected information and links claims to their sources. This step ensures that generated responses are traceable and verifiable, an important factor in research applications.

To monitor and refine agent performance, Anthropic employed both automated and human evaluations. An internal LLM-based judge was used to grade outputs on dimensions such as factual accuracy, source quality, and completeness. Human testers complemented this with real-world usage, catching subtle issues such as reliance on SEO-optimized sources over more authoritative materials.
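As a rough illustration of rubric-based grading, the sketch below aggregates per-dimension scores into a single verdict. It assumes the judge model has already returned a score between 0 and 1 for each dimension; the dimension keys, the unweighted mean, and the 0.7 pass threshold are assumptions made for the example, not details from Anthropic.

```python
from statistics import mean

# Dimensions named in the article; the snake_case keys are an assumption.
DIMENSIONS = ("factual_accuracy", "source_quality", "completeness")


def grade(scores: dict[str, float], pass_threshold: float = 0.7) -> dict:
    """Combine per-dimension judge scores (each in [0, 1]) into an overall verdict."""
    missing = [d for d in DIMENSIONS if d not in scores]
    if missing:
        raise ValueError(f"missing dimensions: {missing}")
    for d in DIMENSIONS:
        if not 0.0 <= scores[d] <= 1.0:
            raise ValueError(f"{d} must be between 0 and 1, got {scores[d]}")
    overall = mean(scores[d] for d in DIMENSIONS)
    return {
        "overall": overall,
        "passed": overall >= pass_threshold,
        # The lowest-scoring dimension tells a reviewer where to look first.
        "weakest": min(DIMENSIONS, key=lambda d: scores[d]),
    }


if __name__ == "__main__":
    print(grade({"factual_accuracy": 1.0, "source_quality": 0.5, "completeness": 0.75}))
```

Surfacing the weakest dimension is one way automated scores can direct human testers toward issues such as poor source selection.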

Production deployment introduced its own challenges. Because agents are stateful and run across many turns, the system was designed to resume progress after errors and adapt to changing conditions without starting from scratch. Engineers also introduced “rainbow deployments” to gradually roll out updates without disrupting ongoing agent sessions.
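Resuming after an error, rather than restarting, is commonly done with checkpoints. The following is a minimal sketch of that general idea, assuming a JSON file as the checkpoint store; `run_with_checkpoints` and its parameters are hypothetical and do not describe Anthropic's actual mechanism.

```python
import json
from pathlib import Path
from typing import Callable


def run_with_checkpoints(
    steps: list[str],
    checkpoint_path: Path,
    execute: Callable[[str], str],
) -> dict[str, str]:
    """Run steps in order, saving each result so a later call can resume after a failure."""
    state: dict[str, dict[str, str]] = {"completed": {}}
    if checkpoint_path.exists():
        # Reload results from a previous, possibly interrupted, run.
        state = json.loads(checkpoint_path.read_text())
    for step in steps:
        if step in state["completed"]:
            continue  # Already done in an earlier run; do not repeat the work.
        state["completed"][step] = execute(step)
        # Persist after every step so at most one step is lost on a crash.
        checkpoint_path.write_text(json.dumps(state))
    return state["completed"]


if __name__ == "__main__":
    path = Path("research_state.json")
    print(run_with_checkpoints(["plan", "search", "synthesize"], path, str.upper))
```

If `execute` raises partway through, calling the function again with the same checkpoint path skips the finished steps and continues from the one that failed.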

Looking forward, Anthropic notes that asynchronous execution, where agents can operate independently without waiting for others to complete, could unlock further efficiency. However, this would require additional advances in coordination and state management.

In its current form, the Claude Research feature demonstrates a practical application of multi-agent AI systems to large-scale, complex tasks. Rather than trying to make a single model do everything, Anthropic’s approach divides the work across multiple agents, each tuned to specific subtasks with distinct context windows and roles.

While the architecture is not universally applicable (for instance, it is less suited to tightly interdependent tasks like coding), it shows strong promise in domains that benefit from parallel exploration, large context requirements, and source-driven reasoning.

About the Author

Ryan Chen

Ryan Chan is an AI correspondent from Chain.
