Introducing FLUX.1 Kontext: A New Chapter in In-Context Image Generation

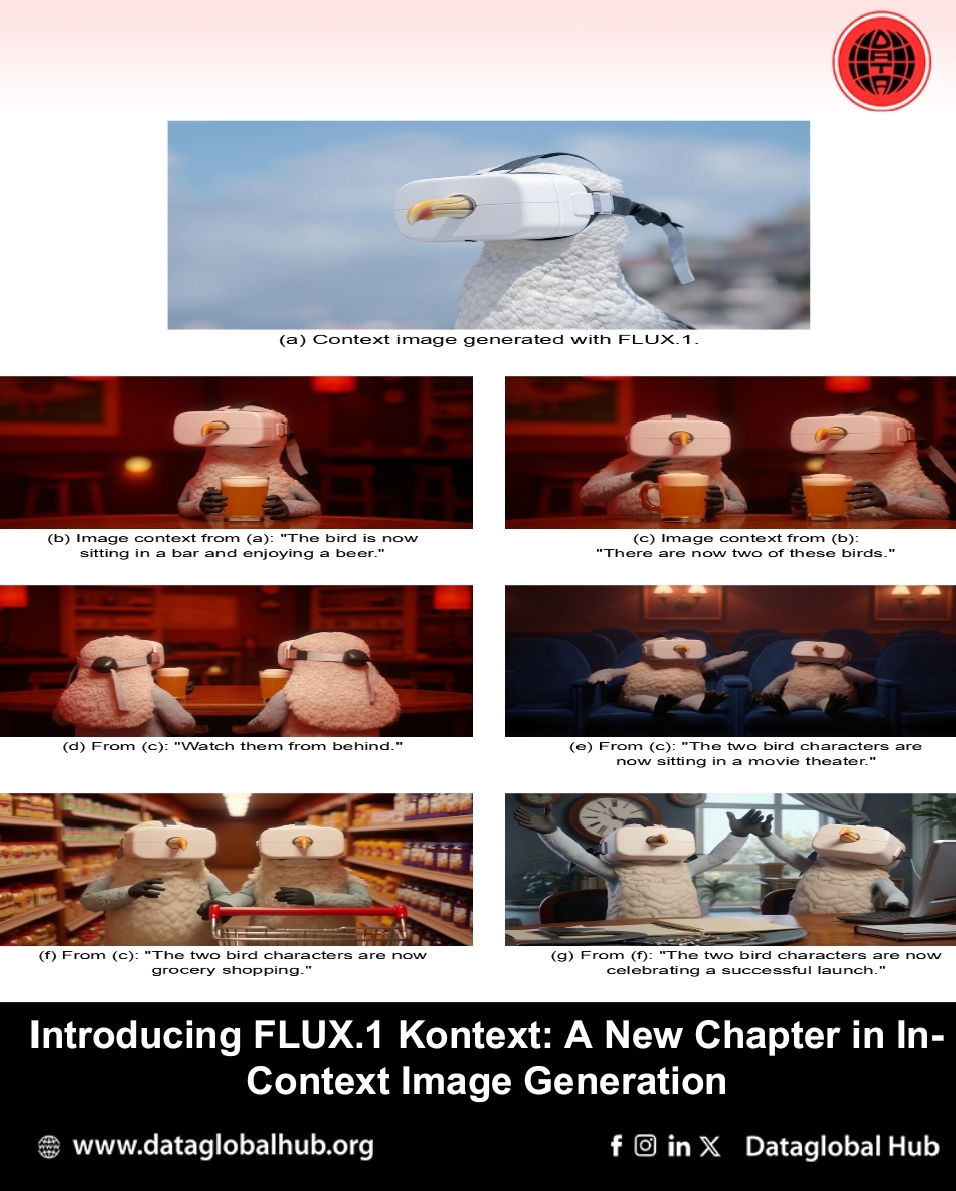

Black Forest Labs (BFL) has just released FLUX.1 Kontext, a suite of generative flow matching models that lets you generate and edit images using both text prompts and image inputs. Blending text with visual input supports more intentional, context-aware creation. Unlike traditional models with similar capabilities, which often operate in isolation, the FLUX.1 Kontext family performs in-context image generation, so users can create what they have in mind with simple text prompts.

At the heart of this suite is the ability to not just generate images, but to modify and iterate upon them quickly, and with a high degree of fidelity to both character and style. Users can preserve specific elements, such as a person or object, and carry them through different environments and prompts. Want to make her smile, change the setting to a nightclub, or cover the entire scene in snow? All possible with the click of a button and a few words of instruction.
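The iterate-and-carry-forward workflow described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not BFL's API: `EditSession`, `EditFn`, and `fake_edit` are hypothetical names, and `fake_edit` is a stand-in that only records the instruction instead of calling a model.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical signature: takes the current image bytes and an instruction,
# returns the edited image bytes. A real backend would call a model here.
EditFn = Callable[[bytes, str], bytes]


@dataclass
class EditSession:
    """Tracks a chain of in-context edits applied to one starting image."""
    image: bytes
    edit_fn: EditFn
    history: List[str] = field(default_factory=list)

    def apply(self, instruction: str) -> bytes:
        # Each turn edits the previous result, so earlier changes carry over.
        self.image = self.edit_fn(self.image, instruction)
        self.history.append(instruction)
        return self.image


def fake_edit(image: bytes, instruction: str) -> bytes:
    # Stand-in for a real model call (assumption): appends the instruction
    # to the bytes so the chain of edits stays visible.
    return image + b"|" + instruction.encode("utf-8")


session = EditSession(image=b"portrait", edit_fn=fake_edit)
for step in [
    "make her smile",
    "change the setting to a nightclub",
    "cover the scene in snow",
]:
    session.apply(step)

print(session.history)
print(session.image)
```

Because every turn edits the previous output and not the original image, changes accumulate from one instruction to the next.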

The core capabilities include:

Meet the Models: [pro], [max], and [dev]

Two new models join the BFL API through the Kontext suite: FLUX.1 Kontext [pro] and FLUX.1 Kontext [max].

For researchers and developers, there’s also FLUX.1 Kontext [dev], an open-weight 12B diffusion transformer currently available in private beta. This lightweight model is designed for research use, customization, and compatibility with previous FLUX.1 [dev] inference code.

Performance You Can Validate

To benchmark performance, BFL introduces KontextBench, a new dataset built from crowd-sourced, real-world use cases. Full results are available in the technical report.

A few limitations remain: visual artifacts can emerge after excessive multi-turn editing, and prompt adherence may occasionally fall short. Additionally, broader world knowledge is still limited, something BFL is openly acknowledging in its ongoing development.

FLUX.1 Kontext reflects Black Forest Labs’ ongoing commitment to multimodal generation, balancing speed, consistency, and control in a package built for iteration. Whether you're a designer looking for fast mockups or a developer exploring fine-grained visual control, FLUX.1 Kontext provides a structured foundation for creative exploration.

For those interested in contributing to its development, FLUX.1 Kontext [dev] is open for research collaboration. Reach out here

About the Author

Liang Wei

Liang Wei is our AI correspondent from China.
