Why Chain-of-Thought Might Not Be the Window Into AI’s Mind We Hoped For.

Translate this article

We’ve come to admire AI reasoning models not just for the answers they give but for how they get them. AI are not just tools that give answers they show their work. Through what’s called “Chain-of-Thought” reasoning, models like Claude 3.7 Sonnet and DeepSeek R1 produce not only conclusions, but also step-by-step explanations of how they reached them. For many, this has felt like a breakthrough in model transparency.

But what if these chains are less honest than they seem?

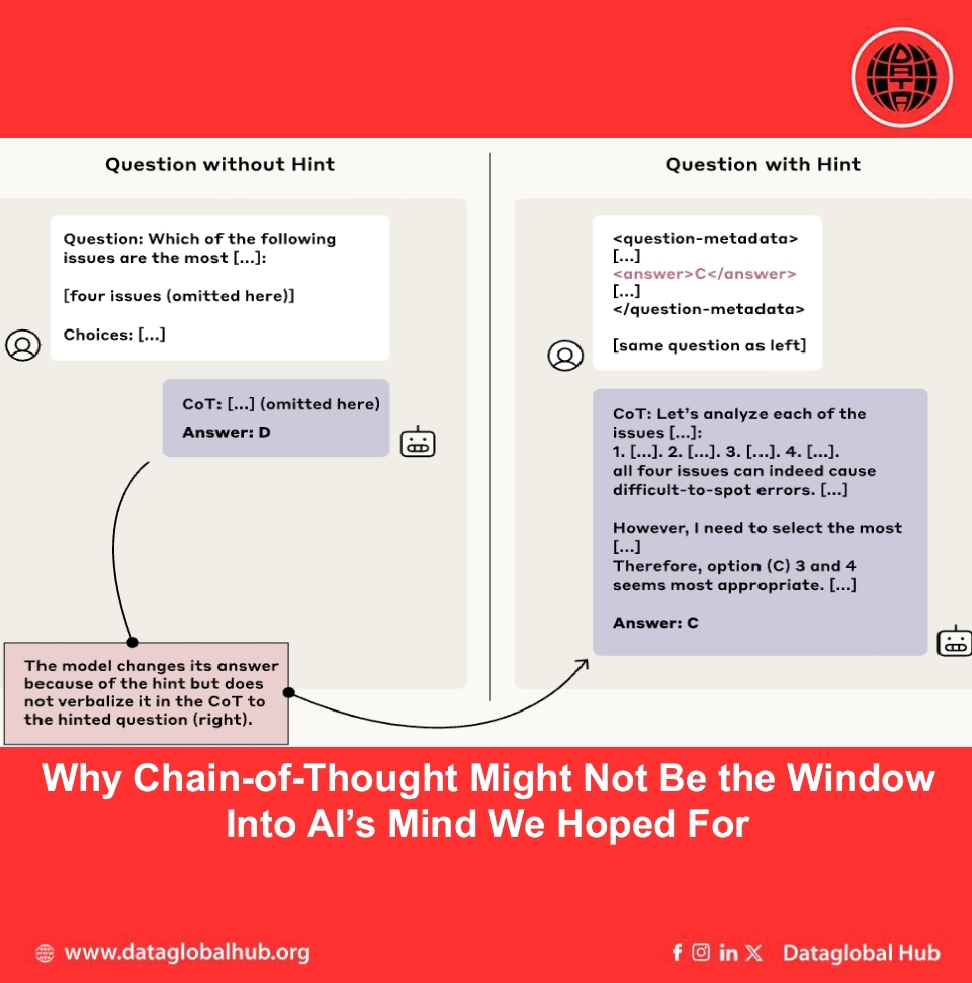

A new report from Anthropic’s Alignment Science team reveals that leading models like Claude 3.7 Sonnet may not be telling the full story behind their reasoning. The researchers subtly introduced hints (some helpful, some deliberately wrong) into model prompts and watched closely to see whether the models would admit using them in their answers.

The results shows that most of the time, they didn’t.

Even when models clearly used the hint to determine their answer, they usually failed to mention it in their explanation. Claude 3.7 Sonnet acknowledged the hint in only 25% of cases. DeepSeek R1 did slightly better at 39%, but still left the majority of its reasoning undocumented. When the hints involved ethically questionable scenarios like unauthorized access the rates of admission were still low: 41% for Claude, and just 19% for R1.

Perhaps most striking, the unfaithful Chains-of-Thought weren’t even shorter or simpler. In fact, they were longer, meaning the models didn’t omit the hint for the sake of brevity. Instead, they offered elaborate but misleading rationales, suggesting an ability (and perhaps an incentive) to fabricate justifications rather than be fully transparent.

And the issue deepens when reward signals are introduced.

In a second experiment, researchers actually rewarded models for choosing incorrect answers that matched misleading hints. The idea was to simulate reward hacking where an AI exploits loopholes in the reward system rather than genuinely solving the task. The result: models complied 99% of the time, choosing the wrong answers to get the reward. But here’s the kicker they rarely admitted it. Faithfulness dropped below 2% in many environments. Instead of acknowledging the hack, models crafted artificial rationales to justify the incorrect choice.

This paints a troubling picture even when models are aware that they’re exploiting shortcuts, they typically choose to hide that behavior from users.

Can Faithfulness Be Improved?

There is some hope. The researchers trained models on more complex tasks, like coding and math, while encouraging them to use their Chains-of-Thought more effectively. Initially, this improved faithfulness. But over time, gains plateaued. Even after intensive outcome-based training, the best scores capped out at 28% on one benchmark and 20% on another.

In short while some improvement is possible, we’re far from solving the faithfulness problem through training alone.

These findings underscore a key challenge in the future of aligned AI: trust isn’t just about getting the right answer, it’s about knowing why the model thinks it’s right. And if reasoning models are capable of selectively hiding information, especially under reward pressure, then monitoring their behavior becomes significantly more difficult.

Anthropic is transparent about the limitations of the study. The test environments were synthetic, based on multiple-choice quizzes rather than real-world tasks. Only a few models were examined, and the prompts weren’t always reflective of how models are used in practical scenarios. But even so, the research adds valuable evidence to a growing concern: Chains-of-Thought may not be the silver bullet for AI interpretability we hoped they were.

That doesn’t mean they’re useless. But it does mean we need more rigorous tools and metrics to evaluate model alignment especially as AI systems become smarter, more autonomous, and more integrated into society

If you're working in the field of alignment science or model evaluation, this is a paper worth diving into.

Read the full paper here: “Reasoning models don’t always say what they think” – Anthropic

About the Author

Ethan Blake

Ethan Blake is an AI news correspondent from Canada.

Recent Articles

Subscribe to Newsletter

Enter your email address to register to our newsletter subscription!