Google's New AI Breakthrough Gives Developers an Unfair Advantage: Beating Models 10x Its Size with Smarter, Step-by-Step Training


A research team from Google Cloud AI and UCLA has introduced a new way to train AI models that helps smaller, more efficient systems tackle complex, multi-step problems previously out of their reach.

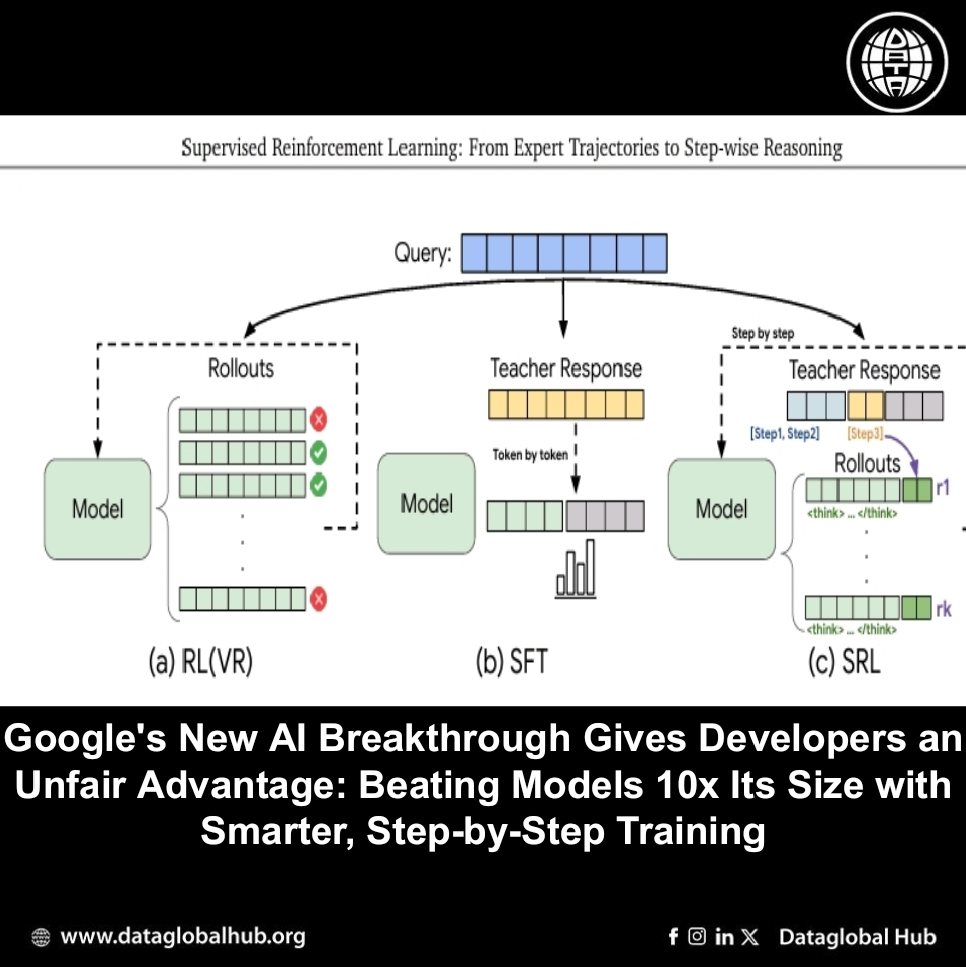

The method, named Supervised Reinforcement Learning (SRL), addresses a core challenge in AI development: teaching models the "how" of reasoning, not just the "what" of the answer.

Traditional training often hits a wall with difficult reasoning tasks. Supervised fine-tuning can produce models that memorize and copy solutions without truly understanding them. Reinforcement learning that rewards only a correct final answer has the opposite problem: it breaks down when the model rarely or never reaches that answer on its own, which leaves it with no signal to learn from.
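A toy sketch shows why an outcome-only reward can stall. It assumes, for illustration only, a reward of 1 for a correct final answer and 0 otherwise. It also assumes a learning signal equal to each attempt's reward minus the average reward over a group of sampled attempts. That is a common baseline choice, not a detail from this article. When no attempt succeeds, every signal is zero.

```python
"""Why an outcome-only reward can stall: a toy illustration.

Assumptions (for illustration only): each sampled attempt earns 1.0 if its final
answer matches the reference and 0.0 otherwise, and the learning signal for each
attempt is its reward minus the mean reward of the group.
"""


def outcome_rewards(final_answers: list[str], reference: str) -> list[float]:
    # Reward only the final answer: 1.0 if it matches, 0.0 otherwise.
    return [1.0 if ans == reference else 0.0 for ans in final_answers]


def centered_advantages(rewards: list[float]) -> list[float]:
    # Subtract the group mean so better-than-average attempts get a positive signal.
    mean = sum(rewards) / len(rewards)
    return [r - mean for r in rewards]


# Hard problem: none of four sampled attempts reaches the right answer.
hard = outcome_rewards(["12", "15", "9", "14"], reference="42")
print(centered_advantages(hard))  # [0.0, 0.0, 0.0, 0.0]: nothing to learn from

# Easier problem: one attempt succeeds, so the signal is non-zero.
easy = outcome_rewards(["42", "15", "9", "14"], reference="42")
print(centered_advantages(easy))  # [0.75, -0.25, -0.25, -0.25]
```

On hard problems the all-zero case is the norm rather than the exception, which is the gap a denser, per-step signal is meant to fill.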

SRL bridges this gap by breaking down expert problem-solving into individual steps. During training, the model is prompted to generate its own "inner monologue" of reasoning and then produce a concrete action for the next step. It then receives immediate feedback based on how closely its action matches the expert's step, creating a dense, step-by-step learning signal.
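The idea can be sketched in a few lines. This is an illustrative approximation, not the authors' implementation. It assumes the expert solution is already split into steps. It also assumes the model separates its reasoning from its action with a hypothetical `ACTION:` marker, and that "how closely" an action matches the expert's step is measured with the sequence-similarity ratio from Python's `difflib`. The exact output format and scoring used in the paper may differ.

```python
"""A minimal sketch of step-wise training data and rewards in the spirit of SRL.

Assumptions (not taken from the article): expert solutions are pre-split into
steps, the model marks its action with a hypothetical "ACTION:" tag, and action
similarity is scored with difflib's SequenceMatcher ratio.
"""
from difflib import SequenceMatcher


def stepwise_examples(problem: str, expert_steps: list[str]) -> list[tuple[str, str]]:
    # For each step, the context is the problem plus all expert steps before it,
    # and the target is that expert step.
    examples = []
    for k, step in enumerate(expert_steps):
        context = "\n".join([problem, *expert_steps[:k]])
        examples.append((context, step))
    return examples


def split_output(model_output: str, marker: str = "ACTION:") -> tuple[str, str]:
    # Separate the free-form "inner monologue" from the concrete action.
    monologue, _, action = model_output.partition(marker)
    return monologue.strip(), action.strip()


def step_reward(model_output: str, expert_step: str) -> float:
    # Only the action is scored; the monologue is left unconstrained.
    _, action = split_output(model_output)
    if not action:
        return 0.0  # no action found: assumed to earn zero reward
    return SequenceMatcher(None, action, expert_step).ratio()


steps = ["x + 3 = 7", "x = 7 - 3", "x = 4"]
for context, target in stepwise_examples("Solve 2x + 6 = 14.", steps):
    print(repr(context), "->", target)

print(step_reward("Subtract 3 from both sides. ACTION: x = 7 - 3", "x = 7 - 3"))  # 1.0
print(step_reward("I think the answer is 4.", "x = 4"))  # 0.0
```

Because every step yields its own graded reward between 0 and 1, a partially correct action still earns partial credit. Under an outcome-only reward, the same action would earn nothing unless the final answer also came out right.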

The key advantages for developers and companies are clear:

Unlocks Higher Performance: In tests, a 7-billion-parameter model trained with SRL significantly outperformed its counterparts on challenging math benchmarks (like AMC and AIME) and more than doubled the performance of a strong baseline on real-world software engineering tasks from SWE-Bench.

Creates a Powerful Training Pipeline: The research found that using SRL as an initial phase before applying standard reinforcement learning yielded the strongest overall results, creating a more effective blueprint for building capable models.

Makes Advanced Reasoning More Accessible: By giving models a clearer path to learning complex logic, SRL helps them develop more sophisticated reasoning skills without relying solely on larger models and bigger compute budgets.

This approach offers a promising path to giving smaller, more deployable models stronger problem-solving capabilities, a tangible edge in the push toward more efficient and capable AI systems.

For technical details, you can read the full paper “Supervised Reinforcement Learning: From Expert Trajectories to Step-wise Reasoning” on arXiv.

About the Author

Ryan Chen

Ryan Chen is an AI correspondent from Chain.
